Get data

This section describes how to download air monitoring and modeling data.

Air monitoring data

- Final data

- Preliminary data

- Air Monitoring Site Information

Summarized air monitoring results

- External Data Explorers

- Internal Data Explorers

- 2.1.14 MPCA Tableau Server

Health benchmarks and air quality standards

- Air toxics

- 2.2.1 Inhalation health benchmarks

- Criteria pollutants

- 2.2.2 Air Quality Standards (NAAQs and MAAQS)

Air modeling

Context

- Emissions

- Meteorology and Climate

- Geography and Census data

2.1 Air monitoring

2.1.1 Retrieving data from AQS

The Air Quality System (AQS) contains ambient air pollution data collected by EPA, state, local, and tribal air pollution control agencies from over thousands of monitors. AQS also contains meteorological data, descriptive information about each monitoring station (including its geographic location and its operator), and data quality assurance/quality control information.

Registered users can access the AQS database via a web application at https://www.epa.gov/aqs. Raw data extracts can be run using the AMP501 report. The AMP501 provides data in the pipe delimited RD transaction format.

2.1.2 Retrieving data from AQS API

The AQS API provides access to air quality data stored in the EPA’s AQS database and is open to the public. AQS API

Note: The AQS API requires a user name and password. The username and password is not the same as your AQS User Account. To request an API account, follow the instructions on the documentation page.

Sample R script

Click the button below for code for a shiny app to access the AQS API.

library(httr)

library(jsonlite)

library(data.table)

library(lubridate)

library(stringr)

library(tidyverse)

library(readxl)

library(glue)

library(shiny)

library(shinyWidgets)

library(shinyjs)

library(shinycssloaders)

library(shinyBS)

library(plotly)

library(DT)

library(sf)

library(sp)

library(sampSurf)

library(swfscMisc)

library(leaflet)

library(leaflet.extras)

library(tigris)

library(htmltools)

service_opts <- c("sampleData", "dailyData", "annualData", "qaBlanks",

"qaCollocatedAssessments", "qaFlowRateVerifications", "qaFlowRateAudits", "qaOnePointQcRawData", "qaPepAudits") %>%

setNames(c("Sample data", "Daily data", "Annual data", "QA Blanks", "QA Collocated Assessments", "QA Flow Rate Verifactions", "QA Flow Rate Audits",

"QA One Point QC Raw Data", "QA PEP Audits"))

g <- list(

scope = 'usa',

projection = list(type = 'albers usa'),

showland = TRUE,

landcolor = toRGB("gray95"),

subunitcolor = toRGB("gray85"),

countrycolor = toRGB("gray85"),

countrywidth = 0.5,

subunitwidth = 0.5

)

ui <- fluidPage(

useShinyjs(),

tags$style(type='text/css', "nav.navbar.navbar-default.navbar-static-top{border-color: #f5f5f5;background-color: #f5f5f5;}"),

tags$style(type='text/css', ".navbar{min-height: 0px; margin-bottom: 0px;}"),

tags$style(type='text/css', ".navbar-brand{height: 0px; padding: 0px 0px;}"),

navbarPage

(

title = NULL, id = "navBar",

tabPanel

(

title = "01", value = "panel1",

h1("Log in or sign up for AQS API key", align = "center"),

textInput("email", "Email", ""),

fluidRow(column(2, passwordInput("key", "Key", "")),

column(8, br(),

dropMenu(

actionButton("key_info", "", icon = icon('info')),

tags$div(

span("This is your AQS API key. If you do not have one or need to reset it, enter your email and click Sign up for API key (check your email in a few minutes)."),

),

placement = "right",

arrow = TRUE))),

fluidRow(column(2, actionButton("auth", "Connect")),

column(2, actionButton("signup", "Sign up for API key")),

#column(4, br(), textOutput("authText"))

)

),

tabPanel

(

title = "02", value = "panel2",

actionButton("go_back1", "Back"),

h1("Select service and selection method", align = "center"),

fluidRow(column(2, pickerInput("service", label = "Select service", choices = service_opts)),

column(2, actionButton("by_state", "Select by state")),

column(2, actionButton("by_county", "Select by county")),

column(2, actionButton("by_site", "Select by site"))

)

),

tabPanel (

title = "03", value = "panel3",

actionButton("go_back2", "Back"),

h1('Select one or more states by clicking, drawing shapes, or using the list at the bottom', align = "center"),

leafletOutput("state_map"),

fluidRow(

column(3, pickerInput("states", label = "Select states", choices = "", multiple = T,

options = list(`actions-box` = TRUE, `live-search`=TRUE))),

column(3, pickerInput("parm_class", label = "Select parameter class", choices = "CRITERIA",

selected = "CRITERIA",

options = list(`live-search` = TRUE))),

column(3, pickerInput("parms", label = "Select parameters", choices = "", multiple = T,

options = list(`actions-box` = TRUE, `live-search`=TRUE))),

column(3, dateRangeInput("date_range", "Select date range", today(), today()))

),

fluidRow(

actionButton("get_data", "Get Data"),

actionButton("select_cnty", "Select Counties"),

actionButton("next_map", "Select sites")

)

),

tabPanel (

title = "04", value = "panel4",

actionButton("go_back3", "Back"),

h1("Select one or more counties", align = "center"),

leafletOutput("cnty_map"),

fluidRow(

column(3, pickerInput("cntys", label = "Select counties", choices = "", multiple = T,

options = list(`actions-box` = TRUE, `live-search`=TRUE))),

column(3, actionButton("get_data2", "Get Data"))

)

),

tabPanel (

title = "05", value = "panel5",

actionButton("go_back4", "Back"),

h1("Select one or more monitors", align = "center"),

leafletOutput("site_map"),

fluidRow(

column(3, pickerInput("site_select", label = "Select sites", choices = "", multiple = T,

options = list(`actions-box` = TRUE, `live-search`=TRUE))),

column(3, actionButton("get_data3", "Get Data"))

)

),

tabPanel (

title = "06", value = "panel6",

actionButton("go_back5", "Back"),

tabsetPanel(

tabPanel("View data",

fluidRow(column(1, downloadButton("downloadData", "Download")),

column(11, withSpinner(dataTableOutput("table"), type = 1))

)

)

)

)

)

)

server <- function(input, output, session) {

v <- reactiveValues(geo_select = c(),

selected_states = c(),

selected_cntys = c(),

selected_sites = c(),

state_polys = NULL,

cnty_polys = NULL,

site_polys = NULL,

selected_classes = "CRITERIA",

selected_parms = NULL,

selected_dates = list(ymd(20190101), ymd(20190101)),

data = data.table()

)

observeEvent(input$signup,{

req(input$email)

check_auth <<- GET(glue("https://aqs.epa.gov/data/api/signup?email={input$email}"))$content %>%

rawToChar() %>% fromJSON() %>% pluck("Data")

}, ignoreInit = T)

observeEvent(state_list(), {

hide(selector = "#navBar li a[data-value=panel1]")

shinyjs::show(selector = "#navBar li a[data-value=panel2]")

updateNavbarPage(session, "navBar", selected="panel2")

})

observeEvent(input$by_state, v$geo_select <- "State")

observeEvent(input$by_county, v$geo_select <- "County")

observeEvent(input$by_site, v$geo_select <- "Site")

state_list <- reactive({

if(input$auth == 0) return()

isolate({

json_states <<- rawToChar(GET(

glue(

"https://aqs.epa.gov/data/api/list/states?email={input$email}&key={input$key}"

),

encode = "json"

)$content)

states <- if (!str_detect(json_states, '\\"status\\": \\"Success\\"'))

NULL else

fromJSON(json_states, simplifyDataFrame = T)$Data %>%

setDT()

check3 <<- states

})

})

observeEvent(list(input$by_state, input$by_county, input$by_site), {

shiny::validate(need(state_list(), label = "state_list"))

updatePickerInput(session = session, inputId = "states",

choices = state_list()[, code %>% setNames(paste0(code, ": ", value_represented))],

selected = v$selected_states

)

}, priority = -1)

state_data <- reactive(states(cb = TRUE))

cnty_data <- reactive({

input$select_cnty

isolate({

shiny::validate(need(v$selected_states, label = "states"))

counties(state = v$selected_states)})

})

observeEvent(input$states, v$selected_states <- input$states, ignoreNULL = F)

observeEvent(input$state_map_shape_click,{

clicked <- input$state_map_shape_click$id %>% str_extract("\\d+") %>% str_pad(2, "left", 0)

if(clicked %in% v$selected_states) {

v$selected_states <- v$selected_states[!(v$selected_states %in% clicked)]

} else {

v$selected_states <- c(v$selected_states, clicked)

}

})

observeEvent(input$state_map_draw_new_feature,{

v$state_polys <- c(v$state_polys, list(input$state_map_draw_new_feature)) %>%

set_names(purrr::map(., ~.x$properties$`_leaflet_id`))

})

observeEvent(input$state_map_draw_deleted_features,{

remove_polys <- map_int(1:length(input$state_map_draw_deleted_features$features),

~pluck(input$state_map_draw_deleted_features, "features", .x, "properties", "_leaflet_id"))

v$state_polys <- v$state_polys[!(names(v$state_polys) %in% as.character(remove_polys))]

})

observeEvent(v$state_polys, {

if(!length(v$state_polys)) {

v$selected_states <- NULL

return()

}

polys <- purrr::map(v$state_polys, ~if(.x$properties$feature_type == "circle") {

.x$geometry$coordinates %>% unlist() %>%

{circle.polygon(.[1], .[2], .x$properties$radius / 1000, sides = 1000, by.length = F,

units = "km", poly.type = "gc.earth")} %>%

Polygon()

} else {

.x$geometry$coordinates[[1]] %>% rbindlist() %>% Polygon()

})

polys <- polys %>% imap(~Polygons(list(.x), .y)) %>% SpatialPolygons() %>% st_as_sf()

st_crs(polys) <- "WGS84"

polys <- st_transform(polys, "NAD83")

state_data1 <<- state_data()

v$selected_states <- st_intersection(state_data(), polys) %>% pull(GEOID)

check5 <- v$selected_states

})

observeEvent(v$selected_states, {

if(is.null(state_list())) return()

check1 <<- state_data()

check2 <<- v$selected_states

selected <<- subset(state_data(), STATEFP %in% v$selected_states)

not_selected <<- subset(state_data(), !(STATEFP %in% v$selected_states))

proxy <- leafletProxy("state_map", session)

if(nrow(selected) > 0) {proxy %>%

addPolygons(

data = selected,

fillColor = "blue",

color = "black",

label = ~selected$NAME,

layerId = ~paste(selected$STATEFP, "selected"),

options = pathOptions(pane = "selected_states")

)

}

proxy %>% removeShape(layerId = paste(not_selected$STATEFP, "selected"))

updatePickerInput(session = session, inputId = "states",

choices = state_list()[, code %>% setNames(paste0(code, ": ", value_represented))],

selected = v$selected_states)

}, ignoreNULL = F)

observeEvent(input$cntys, v$selected_cntys <- input$cntys, ignoreNULL = F)

observeEvent(input$cnty_map_shape_click,{

clicked <<- input$cnty_map_shape_click$id %>% str_extract("\\d+") %>% str_pad(3, "left", 0)

if(clicked %in% v$selected_cntys) {

v$selected_cntys <- v$selected_cntys[!(v$selected_cntys %in% clicked)]

} else {

v$selected_cntys <- c(v$selected_cntys, clicked)

}

})

observeEvent(input$cnty_map_draw_new_feature,{

v$cnty_polys <- c(v$cnty_polys, list(input$cnty_map_draw_new_feature)) %>%

set_names(purrr::map(., ~.x$properties$`_leaflet_id`))

})

observeEvent(input$cnty_map_draw_deleted_features,{

remove_polys <- map_int(1:length(input$cnty_map_draw_deleted_features$features),

~pluck(input$cnty_map_draw_deleted_features, "features", .x, "properties", "_leaflet_id"))

v$cnty_polys <- v$cnty_polys[!(names(v$cnty_polys) %in% as.character(remove_polys))]

})

observeEvent(v$cnty_polys, {

if(!length(v$cnty_polys)) {

v$selected_cntys <- NULL

return()

}

polys <- purrr::map(v$cnty_polys, ~if(.x$properties$feature_type == "circle") {

.x$geometry$coordinates %>% unlist() %>%

{circle.polygon(.[1], .[2], .x$properties$radius / 1000, sides = 1000, by.length = F,

units = "km", poly.type = "gc.earth")} %>%

Polygon()

} else {

.x$geometry$coordinates[[1]] %>% rbindlist() %>% Polygon()

})

polys <- polys %>% imap(~Polygons(list(.x), .y)) %>% SpatialPolygons() %>% st_as_sf()

st_crs(polys) <- "WGS84"

polys <- st_transform(polys, "NAD83")

cnty_data1 <<- cnty_data()

v$selected_cntys <- st_intersection(cnty_data(), polys) %>% pull(GEOID)

check5 <- v$selected_cntys

})

observeEvent(v$selected_cntys, {

if(is.null(cnty_data())) return()

check1 <<- cnty_data()

check2 <<- v$selected_cntys

selected <<- subset(cnty_data(), GEOID %in% v$selected_cntys)

not_selected <<- subset(cnty_data(), !(GEOID %in% v$selected_cntys))

proxy <- leafletProxy("cnty_map", session)

if(nrow(selected) > 0) {proxy %>%

addPolygons(

data = selected,

fillColor = "blue",

color = "black",

label = ~selected$NAME,

layerId = ~paste(selected$GEOID, "selected"),

options = pathOptions(pane = "base_cntys")

)

}

proxy %>% removeShape(layerId = paste(not_selected$GEOID, "selected"))

updatePickerInput(session = session, inputId = "cntys",

choices = cnty_data()$GEOID %>% setNames(paste0(cnty_data()$GEOID, ": ", cnty_data()$NAME)),

selected = v$selected_cntys)

}, ignoreNULL = F)

observeEvent(input$site_select, v$selected_sites <- input$site_select, ignoreNULL = F)

observeEvent(list(input$by_state, input$by_county, input$by_site), {

v$state_polys <- NULL

v$selected_cntys <- NULL

v$selected_sites <- NULL

if(input$by_state | input$by_county | input$by_site) {

hide(selector = "#navBar li a[data-value=panel2]")

shinyjs::show(selector = "#navBar li a[data-value=panel3]")

updateNavbarPage(session, "navBar", selected="panel3")

}

}, priority = -2)

observeEvent(req(input$select_cnty), {

updatePickerInput(session = session, inputId = "cntys",

choices = cnty_data()$GEOID %>% setNames(paste0(cnty_data()$GEOID, ": ", cnty_data()$NAME)),

selected = NULL)

hide(selector = "#navBar li a[data-value=panel3]")

shinyjs::show(selector = "#navBar li a[data-value=panel4]")

updateNavbarPage(session, "navBar", selected="panel4")

})

observeEvent(req(input$next_map), {

updatePickerInput(session = session, inputId = "site_select",

choices = site_list()$siteid %>% setNames(paste0(site_list()$siteid, ": ", site_list()$local_site_name)),

selected = NULL)

hide(selector = "#navBar li a[data-value=panel3]")

shinyjs::show(selector = "#navBar li a[data-value=panel5]")

updateNavbarPage(session, "navBar", selected="panel5")

})

observeEvent(input$parm_class, v$selected_classes <- input$parm_class, ignoreNULL = T)

observeEvent(input$parms, v$selected_parms <- input$parms, ignoreNULL = F)

observeEvent(input$date_range, v$selected_dates <- input$date_range, ignoreNULL = T)

class_list <- reactive({

shiny::validate(need(input$auth, "connect"))

isolate({

json_parameter_classes = rawToChar(GET(

glue(

"https://aqs.epa.gov/data/api/list/classes?email={input$email}&key={input$key}"

),

encode = "json"

)$content)

classes = if (!str_detect(json_parameter_classes, '\\"status\\": \\"Success\\"'))

NULL else

fromJSON(json_parameter_classes, simplifyDataFrame = T)$Data %>%

setDT()

})

})

parameter_list <- reactive({

shiny::validate(need(class_list(), label = "class_list"),

need(v$selected_classes, label = "parameter_class"))

if(is.null(v$selected_classes)) return()

check <<- v$selected_classes

isolate({

json_parameters = rawToChar(GET(

glue(

"https://aqs.epa.gov/data/api/list/parametersByClass?email={input$email}&key={input$key}&pc={v$selected_classes}"

),

encode = "json"

)$content)

params <- if (!str_detect(json_parameters, '\\"status\\": \\"Success\\"'))

NULL else

fromJSON(json_parameters, simplifyDataFrame = T)$Data %>%

setDT()

})

})

state_calls <- reactive({

shiny::validate(

need(v$selected_states, label = "State(s)"),

need(v$selected_parms, label = "Parameter(s)"),

need(v$selected_dates[[1]], label = "Start date"),

need(v$selected_dates[[2]], label = "End date")

)

years <- year(v$selected_dates[[1]]) : year(v$selected_dates[[2]])

state_call_table <<- expand_grid(

parm = v$selected_parms,

tibble(

bdate = pmax(v$selected_dates[[1]], ymd(paste(years, "0101"))),

edate = pmin(v$selected_dates[[2]], ymd(paste(years, "1231")))

) %>% mutate_all(~str_replace_all(., "-", "")),

state = v$selected_states

) %>% setDT()

})

monitor_list <- reactive({

next_map <- if(is.null(input$next_map)) 0 else input$next_map

get_data <- if(is.null(input$get_data)) 0 else input$get_data

if(!(next_map | get_data)) return()

isolate({

shiny::validate(need(state_calls(), label = "state_calls"))

outcomes <- state_calls()[, .(success = {

json_text <<- rawToChar(GET(

glue(

"https://aqs.epa.gov/data/api/monitors/byState?email={input$email}&key={input$key}¶m={.BY[[1]]}&bdate={.BY[[2]]}&edate={.BY[[3]]}&state={.BY[[4]]}"

),

encode = "json"

)$content)

}), by = .(parm, bdate, edate, state)]

monitors <<- purrr::map(outcomes$success, ~ if (str_detect(.x, '\\"status\\": \\"Success\\"'))

fromJSON(.x)$Data else

NULL) %>%

rbindlist(fill = T) %>%

unique() %>%

setkey()

monitors[, siteid := paste(state_code, county_code, site_number, sep = "-")]

})

})

site_list <-reactive({

shiny::validate(need(monitor_list(), "monitor_list"))

monitor_list()[, .SD[1], by = siteid]

})

observeEvent(list(input$get_data, input$get_data2, input$get_data3), {

shiny::validate(need(input$service, label = "service"),

need(state_calls(), label = "state_calls"),

need(v$selected_parms, label = "Parameter(s)"),

need(v$selected_dates[[1]], label = "Start date"),

need(v$selected_dates[[2]], label = "End date"),

need(site_list(), label = "site_list")

)

if(v$geo_select == "State") {

outcomes <- state_calls()[, .(success = {

json_text <<- rawToChar(GET(

glue(

"https://aqs.epa.gov/data/api/{input$service}/by{v$geo_select}?email={input$email}&key={input$key}¶m={.BY[[1]]}&bdate={.BY[[2]]}&edate={.BY[[3]]}&state={.BY[[4]]}"

),

encode = "json"

)$content)

}

), by = .(parm, bdate, edate, state)]

}

if(v$geo_select == "County") {

shiny::validate(need(v$selected_cntys, label = "Counties"))

years <- year(v$selected_dates[[1]]) : year(v$selected_dates[[2]])

cnty_call_table <<- expand_grid(

parm = v$selected_parms,

tibble(

state = str_sub(v$selected_cntys, 1, 2),

county = str_sub(v$selected_cntys, 3, 5)

),

tibble(

bdate = pmax(v$selected_dates[[1]], ymd(paste(years, "0101"))),

edate = pmin(v$selected_dates[[2]], ymd(paste(years, "1231")))

) %>%

mutate_all(~str_remove_all(., "-"))

) %>%

setDT()

outcomes <- cnty_call_table[, .(success = {

json_text <<- rawToChar(GET(

glue(

"https://aqs.epa.gov/data/api/{input$service}/by{v$geo_select}?email={input$email}&key={input$key}¶m={.BY[[1]]}&bdate={.BY[[2]]}&edate={.BY[[3]]}&state={.BY[[4]]}&county={.BY[[5]]}&site={.BY[[6]]}"

),

encode = "json"

)$content)

}

), by = .(parm, bdate, edate, state, county)]

}

if(v$geo_select == "Site") {

shiny::validate(need(monitor_list(), label = "monitor_list"),

need(v$selected_sites, label = "Site(s)")

)

years <- year(v$selected_dates[[1]]) : year(v$selected_dates[[2]])

site_call_table <<- expand_grid(

distinct(monitor_list()[siteid %in% v$selected_sites, .(

parm = parameter_code,

state = state_code,

county = county_code,

site = site_number)]),

tibble(

bdate = pmax(v$selected_dates[[1]], ymd(paste(years, "0101"))),

edate = pmin(v$selected_dates[[2]], ymd(paste(years, "1231")))

) %>%

mutate_all(~str_replace_all(., "-", ""))

) %>%

setDT()

outcomes <- site_call_table[, .(success = {

json_text <<- rawToChar(GET(

glue(

"https://aqs.epa.gov/data/api/{input$service}/by{v$geo_select}?email={input$email}&key={input$key}¶m={.BY[[1]]}&bdate={.BY[[2]]}&edate={.BY[[3]]}&state={.BY[[4]]}&county={.BY[[5]]}&site={.BY[[6]]}"

),

encode = "json"

)$content)

}

), by = .(parm, bdate, edate, state, county, site)]

}

raw_data <<- purrr::map(outcomes$success, ~ if (str_detect(.x, '\\"status\\": \\"Success\\"'))

fromJSON(.x)$Data else

NULL

) %>%

rbindlist(fill = T) %>%

setkey()

req(!is_empty(raw_data))

v$data <- left_join(raw_data, dplyr::select(monitor_list(), -c(latitude, longitude, datum, cbsa_code, siteid)),

by = c("state_code", "county_code", "site_number", "parameter_code", "poc"))

purrr::map(3:5, ~hide(selector = glue("#navBar li a[data-value=panel{.x}]")))

shinyjs::show(selector = "#navBar li a[data-value=panel6]")

updateNavbarPage(session, "navBar", selected="panel6")

})

observe({

purrr::map(2:6, ~hide(selector = glue("#navBar li a[data-value=panel{.x}]")))

#hide(selector = "#navBar li a[data-value=panel2]")

hide(selector = "#navBar")

toggle("by_state", condition = !is.null(state_list()))

toggle("by_county", condition = !is.null(state_list()))

toggle("by_site", condition = !is.null(state_list()))

toggle("states", condition = !is.null(state_list()))

toggle("parm_class", condition = !is.null(class_list()))

toggle("parms", condition = !is.null(parameter_list()))

toggle("service", condition = !is.null(state_list()))

})

observeEvent(v$geo_select, {

toggle("get_data", condition = v$geo_select == "State")

#if(v$geo_select %in% c("County", "Site")) removeUI("div:has(> #get_data)")

toggle("select_cnty", condition = v$geo_select == "County")

toggle("next_map", condition = v$geo_select == "Site")

})

observeEvent(list(class_list(), input$by_state, input$by_county, input$by_site), {

shiny::validate(need(class_list(), "class_list"))

updatePickerInput(session = session, inputId = "parm_class",

choices = class_list()[, code %>% setNames(paste0(code, ": ", value_represented))],

selected = v$selected_classes

)

})

observeEvent(list(parameter_list(), input$by_state, input$by_county, input$by_site), {

shiny::validate(need(parameter_list(), "parameter_list"))

updatePickerInput(session = session, inputId = "parms",

choices = parameter_list()[, code %>% setNames(paste0(code, ": ", value_represented))],

selected = v$selected_parms

)

}, priority = -1)

output$state_map <- renderLeaflet({

list(input$by_state, input$by_county, input$by_site)

isolate({

map_us <- leaflet(state_data()) %>%

addTiles() %>%

addMapPane("base_states", zIndex = 380) %>%

addMapPane("selected_states", zIndex = 390) %>%

addPolygons(

fillColor = "grey",

color = "black",

label = ~state_data()$NAME,

layerId = ~state_data()$STATEFP,

options = pathOptions(pane = "base_states")

) %>%

setView(-98.5795, 39.8282, zoom=4) %>%

addDrawToolbar(

targetGroup='Selected',

polylineOptions = FALSE,

markerOptions = FALSE,

circleMarkerOptions = FALSE,

polygonOptions = drawPolygonOptions(shapeOptions=drawShapeOptions(fillOpacity = 0

,color = 'black'

,weight = 3)),

rectangleOptions = drawRectangleOptions(shapeOptions=drawShapeOptions(fillOpacity = 0

,color = 'black'

,weight = 3)),

circleOptions = drawCircleOptions(shapeOptions = drawShapeOptions(fillOpacity = 0

,color = 'black'

,weight = 3)),

editOptions = editToolbarOptions(edit = FALSE, selectedPathOptions = selectedPathOptions()))

#if(length(v$selected_states) < 1) return()

selected <- subset(state_data(), STATEFP %in% v$selected_states)

if(nrow(selected) > 0) {

map_us <- addPolygons(

map_us,

data = selected,

fillColor = "blue",

color = "black",

label = ~selected$NAME,

layerId = ~paste(selected$STATEFP, "selected"),

options = pathOptions(pane = "selected_states")

)

}

map_us

})

})

output$cnty_map <- renderLeaflet({

input$select_cnty

leaflet(cnty_data()) %>%

addTiles() %>%

addMapPane("base_cntys", zIndex = 380) %>%

addMapPane("selected_cntys", zIndex = 390) %>%

addPolygons(

fillColor = "grey",

color = "black",

label = ~cnty_data()$NAME,

layerId = ~cnty_data()$GEOID,

options = pathOptions(pane = "base_cntys")

) %>%

addDrawToolbar(

targetGroup='Selected',

polylineOptions = FALSE,

markerOptions = FALSE,

circleMarkerOptions = FALSE,

polygonOptions = drawPolygonOptions(shapeOptions=drawShapeOptions(fillOpacity = 0

,color = 'black'

,weight = 3)),

rectangleOptions = drawRectangleOptions(shapeOptions=drawShapeOptions(fillOpacity = 0

,color = 'black'

,weight = 3)),

circleOptions = drawCircleOptions(shapeOptions = drawShapeOptions(fillOpacity = 0

,color = 'black'

,weight = 3)),

editOptions = editToolbarOptions(edit = FALSE, selectedPathOptions = selectedPathOptions()))

})

output$site_map <- renderLeaflet({

leaflet(site_list()) %>%

addTiles() %>%

addMapPane("base_sites", zIndex = 380) %>%

addMapPane("selected_sites", zIndex = 390) %>%

addCircleMarkers(fillColor = "grey",

color = "black",

label = ~glue("<p style='font-size:18px'>{local_site_name} ({state_code}-{county_code}-{site_number})<br><br>

{city_name}, {state_name}</p>") %>% purrr::map(HTML),

layerId = ~siteid,

options = pathOptions(pane = "base_sites")

) %>%

addDrawToolbar(

targetGroup='Selected',

polylineOptions = FALSE,

markerOptions = FALSE,

circleMarkerOptions = FALSE,

polygonOptions = drawPolygonOptions(shapeOptions=drawShapeOptions(fillOpacity = 0

,color = 'black'

,weight = 3)),

rectangleOptions = drawRectangleOptions(shapeOptions=drawShapeOptions(fillOpacity = 0

,color = 'black'

,weight = 3)),

circleOptions = drawCircleOptions(shapeOptions = drawShapeOptions(fillOpacity = 0

,color = 'black'

,weight = 3)),

editOptions = editToolbarOptions(edit = FALSE, selectedPathOptions = selectedPathOptions()))

})

observeEvent(input$site_map_marker_click,{

clicked <- input$site_map_marker_click$id %>% str_extract("\\d{2}-\\d{3}-\\d{4}")

if(clicked %in% v$selected_sites) {

v$selected_sites <- v$selected_sites[!(v$selected_sites %in% clicked)]

} else {

v$selected_sites <- c(v$selected_sites, clicked)

}

})

observeEvent(input$site_map_draw_new_feature,{

v$site_polys <- c(v$site_polys, list(input$site_map_draw_new_feature)) %>%

set_names(purrr::map(., ~.x$properties$`_leaflet_id`))

})

observeEvent(input$site_map_draw_deleted_features,{

remove_polys <- map_int(1:length(input$site_map_draw_deleted_features$features),

~pluck(input$site_map_draw_deleted_features, "features", .x, "properties", "_leaflet_id"))

v$site_polys <- v$site_polys[!(names(v$site_polys) %in% as.character(remove_polys))]

})

observeEvent(v$site_polys, {

if(!length(v$site_polys)) {

v$selected_sites <- NULL

return()

}

polys <- purrr::map(v$site_polys, ~if(.x$properties$feature_type == "circle") {

.x$geometry$coordinates %>% unlist() %>%

{circle.polygon(.[1], .[2], .x$properties$radius / 1000, sides = 1000, by.length = F,

units = "km", poly.type = "gc.earth")} %>%

Polygon()

} else {

.x$geometry$coordinates[[1]] %>% rbindlist() %>% Polygon()

})

polys <- polys %>% imap(~Polygons(list(.x), .y)) %>% SpatialPolygons() %>% st_as_sf()

st_crs(polys) <- "WGS84"

site_data <- group_by(site_data1, siteid) %>% group_modify(~st_as_sf(.x, coords = c("longitude", "latitude"), crs = .x$datum) %>%

st_transform("WGS84")) %>%

rename(GEOID = siteid) %>% st_as_sf()

v$selected_sites <- st_intersection(site_data, polys) %>%

pull(GEOID)

check5 <- v$selected_sites

})

observeEvent(v$selected_sites, {

shiny::validate(need(site_list(), "site_list"))

check5 <- v$selected_sites

selected_sites <<- filter(site_list(), siteid %in% v$selected_sites)

not_selected_sites <<- filter(site_list(), !(siteid %in% v$selected_sites))

proxy <- leafletProxy("site_map", session)

if(nrow(selected_sites) > 0) {proxy %>%

addCircleMarkers(

data = selected_sites,

fillColor = "blue",

color = "black",

layerId = ~paste(siteid, "selected")

)

}

proxy %>% removeMarker(layerId = paste(not_selected_sites$siteid, "selected"))

updatePickerInput(session = session, inputId = "site_select",

choices = site_list()$siteid %>% setNames(paste0(site_list()$siteid, ": ", site_list()$local_site_name)),

selected = v$selected_sites)

}, ignoreNULL = F)

observeEvent(purrr::map(1:5, ~input[[paste0("go_back", .x)]]),{

sheet <<- input$navBar

recode(input$navBar,

panel2 = "panel1",

panel3 = "panel2",

panel4 = "panel3",

panel5 = "panel3",

panel6 = if(!is_empty(v$geo_select)) recode(v$geo_select,

State = "panel3",

County = "panel4",

Site = "panel5")

) %>%

{hide(selector = glue("#navBar li a[data-value={input$navBar}]"))

shinyjs::show(selector = glue("#navBar li a[data-value={.}]"))

updateNavbarPage(session, "navBar", selected=.)}

})

output$table <- renderDT({

if(nrow(v$data) == 0) return()

check6 <<- v$data

datatable(v$data, filter = list(position = 'top', clear = FALSE))

})

output$downloadData <- downloadHandler(

filename = function() {

paste("data-", Sys.Date(), ".csv", sep="")

},

content = function(file) {

fwrite(v$data, file)

}

)

}

runApp(shinyApp(ui, server), launch.browser = T)2.1.3 Retrieving data from MPCA WAIR database

The WAIR database provides a queryable local copy of select air quality data extracted from multiple data sources. This database is managed by Margaret McCourtney. Contact Margaret to request login credentials.

See WAIR Data Dictionary for available data tables.

Use the following code to query WAIR using the R package dplyr.

################################################################################################

## This script loads the library and driver and connects to WAIR. A dplyr query extracts

## data from the database into a format specified by Cassie McMahon for calculating

## OZONE DESIGN VALUES

##

## Note: WAIR does not contain values for SamplingFrequency and MonitorProtocolID

##

## Note: dplyr does not have a command to disconnect from the database. Connection will

## terminate upon quitting R. Please do not keep (many) connections open for long periods of

## time.

################################################################################################

## Load the package

library(dplyr)

## Open a connection to the database WAIR, schema AQS ##

my_wair <- src_postgres(dbname = 'wair', host = "eiger", user = "username", password = "password",

options = "-c search_path=aqs")

## Reference a table, or two if combining, in the database (e.g. aqs.monitor & aqs.obs_value) ##

## Select columns and filter by row ##

#aqs.monitor table in WAIR ##

my_monitor <- tbl(my_wair, "monitor") %>%

select(id_mon:poc_code) %>%

filter(stateid==27 && parm_code == 44201)

#aqs.obs_value table in WAIR ##

my_obs <- tbl(my_wair, "obs_value") %>%

filter(parm_code == 44201 && between(sampldate, "2014-06-01", "2014-06-07")) %>%

select(id_mon, dur_code, unitid, method_code,

sampldate, startime, value, nulldata, qual_code)

## Combine monitor data with observations

my_mn_o3 <- inner_join(my_monitor, my_obs, type = "inner", by = c("id_mon"))

## Collect data into a dataframe or table

my_mn_o3_df <- collect(my_mn_o3) %>%

arrange(stateid, cntyid, siteid, parm_code, # Arrange combined data in specified order

poc_code, dur_code, unitid, method_code,

sampldate, startime, value, nulldata, qual_code)

##

head(my_mn_o3_df)Use the following code to query WAIR using the package RPostgrSQL.

################################################################################################

## This script loads the library and driver and connects to WAIR. A PostgrSQL query extracts

## data from the database into a format specified by Cassie McMahon for calculating

## OZONE DESIGN VALUES

##

## Please disconnect from database and unload the driver before proceeding with analysis of

## the data in your dataframe.

##

## Cassie's headings

## "State.Code", "County.Code", "Site.ID", "Parameter", "POC", "Sample.Duration", "Unit",

## "Method", "Date", "Start.Time", "Sample.Value", "NullDataCode", "SamplingFrequency",

## "MonitorProtocolID", "Qual1"

## Note: WAIR does not contain values for SamplingFrequency and MonitorProtocolID

##

################################################################################################

## call the library

library(RPostgreSQL)

## load the PostgreSQL driver

drv <- dbDriver("PostgreSQL")

## Open a connection

con <- dbConnect(drv, dbname = "wair", host = 'eiger', user = 'username', password = 'password')

#***************************** all in 1 step ***************************************************

dframe <- dbGetQuery(con,

statement = paste(

################ insert SQL here ######################

"SELECT m.stateid AS state_code,\

m.cntyid AS county_code,\

m.siteid AS site_id,\

m.parm_code AS parameter,\

m.poc_code AS poc,\

o.dur_code AS sample_duration,\

o.unitid AS unit,\

o.method_code AS method,\

o.sampldate AS date,\

o.startime AS start_time,\

o.value AS sample_value,\

o.nulldata AS nulldatacode,\

NULL AS sampling_frequency,\

NULL AS monitor_protocol_id,\

o.qual_code\

FROM aqs.monitor m \

JOIN aqs.obs_value o \

ON m.id_mon = o.id_mon \

WHERE m.stateid = '27' \

AND m.parm_code = '44201' \

AND o.sampldate BETWEEN '2014-06-01' AND '2014-06-07'\

"

########################################################

));

#***********************************************************************************************

## Closes the connection

dbDisconnect(con)

## Frees all the resources on the driver

dbUnloadDriver(drv)2.1.4 Current AQI observations

Real-time air data for the entire United States is at your finger tips. EPA’s AirNow maintains a publicly accessible folder of current air monitoring data at https://files.airnowtech.org/. Data retrieved from AirNow is preliminary and may change following quality assurance.

Sample R script

Use the following R code to grab the most recent AQI results for the entire country.

library(dplyr)

library(readr)

# Connect to AirNow data site

#https://files.airnowtech.org/

airnow_link <- paste0("https://s3-us-west-1.amazonaws.com//files.airnowtech.org/airnow/today/",

"HourlyData_",

format(Sys.time() - 60*75, "%Y%m%d%H", tz = "GMT"),

".dat")

aqi_now <- read_delim(airnow_link, "|",

col_names = F)

#col_types = c('cccciccdc'))

# Add column names

names(aqi_now) <- c("date", "time", "aqsid", "city", "local_time", "parameter", "units", "concentration", "agency")

# Filter to Ozone and PM2.5 results

aqi_now <- filter(aqi_now, parameter %in% c("OZONE", "PM2.5"))2.1.5 Retrieving data from LIMS via Tableau

The LIMS database is the primary data warehouse for AQ monitoring activities. The LIMS system is being retired and replaced. To ease data accessibilty during this transition LIMS data are available for download via an internal Tableau workbook at http://tableau.pca.state.mn.us/#/workbooks/3342

Sample R script

The following function reads LIMS data from Tableau. All inputs must be provided. The inputs are:

Sites: Integer vector representing site numbers (no state code, county code, or POC) Parameter_list: Integer vector representing parameters to extract. Start_date: Character string of date in YYYY-MM-DD format (or other format recognized by ymd()) End_date: Character string of date in YYYY-MM-DD format (or other format recognized by ymd()) Pollutant_Groups: Character vector of pollutant groups to extract. Air toxics include “metals (TSP),” “VOCs,” “carbonyls.”

read_AT_data_tableau = function(Sites, Parameter_list, Start_date = "2016-01-01", End_date = "2016-03-31", Pollutant_Groups = c("metals (TSP)", "VOCs", "carbonyls")) {

sample_calendar <- function(start = "2016-01-01",

end = "2016-12-31",

day_interval = 6,

type = "air_toxics") {

library(lubridate)

# Convert 'start' and 'end' to class date

start <- ymd(start)

end <- ymd(end)

# Set official start date to selected EPA calendar

if(type == "air_toxics") {

epa_start <- ymd("1989-12-24")

} else {

epa_start <- start

}

# Create full table of sampling dates

calendar <- seq(from = epa_start,

to = end,

by = paste(day_interval, "days"))

# Subset to user's date range

calendar <- calendar[calendar >= start & calendar <= end]

return(calendar)

}

# Generate air toxics sampling dates

Dates = sample_calendar(Start_date, End_date)

# Break dates into quarters

Quarters = quarter(Dates, with_year = T)

library(tidyverse)

# Convert fields to Tableau url format

base_url = "http://tableau.pca.state.mn.us/views/exportDailyData/Datawithnullcodes.csv?"

Sites = paste(paste0(Sites,"-1", collapse = ","), paste0(Sites,"-2", collapse = ","), sep = ",")

Testnames = Pollutant_Groups %>% url_encode() %>% paste0(collapse = ",")

Analytes = url_encode(Analytes) %>% gsub("%2c", "%5C%2C",.) %>% paste0(collapse = ",")

Parameter_list = paste0(Parameter_list, collapse = ",")

AT_data = NULL

for (i in unique(Quarters) ) {

Dates2 = paste0(Dates[Quarters == i], collapse = ",")

#Construct url for Tableau

url = paste0(base_url,

"site%20and%20poc=", Sites,

"&TESTNAME=", Testnames,

"&ANALYTES_ANALYTE=", Analytes,

"&PARAMCODE=", Parameter_list,

"&RUNDATE=", Dates2

)

# Read data from Tableau server

AT_data = bind_rows(AT_data, read_csv(url, col_types = "ccccicccd") )

}

return(AT_data)

}2.1.6 Retrieving continuous data from AirVision

AirVision is the data acquistion and temporary storage database for continuous air monitoring data. Data collected in AirVision is transfered to LIMs and AQS for final data storage. If you need the most recent monitoring observations and would like to access continuous data before it has been transfered to the final data repository you can run reports from AirVision.

To run reports, the AirVision client must be installed on your computer and you need a user account. Contact the Air Monitoring Supervisor to request credentials. Alternatively, an AirVision administator can create a report task that will generate a data report and send it to a specified location (FTP site or e-mail).

2.1.7 AirNow:Active AQI monitors

A map of MPCA’s air monitoring network is available online at Minnesota air monitoring sites.

A list of all active AQI monitoring locations around the United States are published to AirNow in the monitoring_site_locations.dat file.

Sample R script

Click the button below to view a step by step example.

library(dplyr)

library(readr)

# Connect to AirNow data site

#https://files.airnowtech.org/

airnow_link <- paste0("https://s3-us-west-1.amazonaws.com//files.airnowtech.org/airnow/today/",

"monitoring_site_locations.dat")

aqi_sites <- read_delim(airnow_link, "|", col_names = F)

# Drop empty columns

aqi_sites <- aqi_sites[ , -c(14:16,22:23)]

# Add column names

names(aqi_sites) <- c("aqsid",

"parameter",

"local_id",

"name",

"status",

"state_region",

"agency",

"epa_region",

"lat",

"long",

"elevation",

"local_time",

"country",

"city",

"state_fips",

"state",

"county_fips",

"county")

# Filter to Minnesota sites

aqi_sites <- filter(aqi_sites, state_fips %in% c(27))2.1.8 EPA Google Earth Site Maps

The AirData Air Quality Monitors app is a mapping application available on the web and on mobile devices that displays monitor locations and monitor-specific information. It also allows the querying and downloading of data daily and annual summary data. Static KMZ files are also available to download.

https://www.epa.gov/outdoor-air-quality-data/interactive-map-air-quality-monitors

2.1.9 WAIR Site information table

The WAIR database includes a copy of the EPA site information table, which includes site names and location information.

Sample R script

Click the button below to view a step by step example.

library(RPostgreSQL)

username <- #Add WAIR UserName

pass <- #Add WAIR password

## Load the PostgreSQL driver

drv <- dbDriver("PostgreSQL")

## Open a connection

con <- dbConnect(drv, dbname="wair",host='eiger',user=username,password=pass)

sites <- dbGetQuery(con,

statement = paste(

"SELECT *

FROM wair.aqs.site \

"

));

dbDisconnect(con) ## Closes the connection

dbUnloadDriver(drv) ## Frees all the resources on the driver2.1.10 Air toxics Data Explorer

View and download summarized air toxics monitoring data results from the online Air Toxics Data Explorer.

2.1.11 Criteria Pollutant Data Explorer

View and download MPCA calculated annual criteria pollutant design values from the online Criteria Pollutant Data Explorer (https://www.pca.state.mn.us/air/criteria-pollutant-data-explorer).

2.1.12 Air Quality Index Summary Reports

View and download annual AQI Summary Reports (based on final montiroing data) from the online Air Quality Index Data Explorer.

2.1.13 Air Monitoring for PAHs

View and download PAH monitoring results from the community and facility based special projects online at Air Monitoring for PAHs.

2.1.14 Internal Data Explorers

Not all data analysis projects are ready or intended for external audiences. You can find a variety of analzyed monitoring results on the internal Tableau Server.

2.2 Health and standards

2.2.1 Inhalation health benchmarks (IHBs)

The inhalation health benchmarks used by MPCA are available on the web in these formats:

- Web table: https://public.tableau.com/profile/mpca.data.services#!/vizhome/Airtoxicityvalues/Airtoxicityvalues

- Excel: https://www.pca.state.mn.us/sites/default/files/aq9-22.xlsm

- CSV: https://raw.githubusercontent.com/MPCA-air/health-values/master/Inhalation_Health_Benchmarks(IHBs).csv

Use the following R code to fetch the most recent inhalation health benchmarks and references used by MPCA.

library(readr)

url <- "https://raw.githubusercontent.com/MPCA-air/health-values/master/Inhalation_Health_Benchmarks(IHBs).csv"

ihbs <- read_csv(url) 2.2.2 Air Quality Standards (NAAQS and MAAQS)

Criteria air pollutants including: particulate (TSP, PM10, PM2.5), ozone, lead, sulfur dioxide, nitrogen dioxide, and carbon monoxide are regulated via ambient air quality standards. Ambient air quality standards are set at the national (NAAQS) and state (MAAQS) level.

NAAQS

The Clean Air Act, which was last amended in 1990, requires EPA to set National Ambient Air Quality Standards (40 CFR part 50) for pollutants considered harmful to public health and the environment. The Clean Air Act identifies two types of national ambient air quality standards. Primary standards provide public health protection, including protecting the health of “sensitive” populations such as asthmatics, children, and the elderly. Secondary standards provide public welfare protection, including protection against decreased visibility and damage to animals, crops, vegetation, and buildings.

- A summary of current NAAQS is available on EPA’s website:

- When calculating NAAQS design values, analysts must follow the procedures defined in the pollutant specific appendices to 40 CFR 50.

- In August each year, EPA posts official design values here:

MAAQS

Minnesota Ambient Air Quality Standards (MAAQS) are established in Minnesota Administrative Rules 7009.

In most cases, the MAAQS are the same as the NAAQS. The MAAQS also include standards for two pollutants that are not covered by the NAAQS: TSP and hydrogen sulfide. The MAAQS were last updated in January 2017.

When calculating MAAQS design values:

- If MAAQS = NAAQS, use methods described in appendices to 40 CFR 50.

- If MAAQS = revoked NAAQS, use methods described in archived appendices to 40 CFR 50.

- For MAAQS without a NAAQS, such as H2S, use the “Form of the standard” language to guide your calculations.

2.3 Air modeling

2.3.1 NATA modeling

The National Air Toxics Assessment is a nationwide air modeling effort to provide air concentrations and risks for all U.S. census tracts. These results may be pulled from a map project or data tables at NATA. After each inventory is completed the Minnesota statewide cumulative air pollution model is compared to these results.

2.3.2 MNRISKS statewide risk modeling

MNRISKS is the statewide cumulative air pollution model that is produced by MPCA every three years with the air toxics emissions inventory publication. The model itself produces point estimates for air concentrations and potential human health risks for hundreds of air pollutants across Minnesota. The model also incorporates a date and transport component that produces multi-pathway risk results. Census block group averaged results are available on MPCA’s Air modeling and human health webpage.

2.3.3 Downscaler modeling results for Ozone and PM2.5

Downscaled data (a blend of modeling and monitoring results) are provided by the CDC in cooperation with the EPA for the pollutants ozone and PM-2.5. Daily predicitons are available for each Census tract throughout the country for the years 2001 to 2013.

- CDC information about this platform is available online at https://ephtracking.cdc.gov/showAirData.action.

- Data from this platform can be downloaded from the EPA at https://www.epa.gov/air-research/downscaler-model-predicting-daily-air-pollution.

- Downloaded data filtered to Minnesota results is available internally in the folder:

X:\Programs\Air_Quality_Programs\Air Monitoring Data and Risks\6 Air Data\EPA Downscaler Modeling.

2.3.4 CMAQ Ozone modeling

The Community Multiscale Air Quality Modeling System (CMAQ) is defined by EPA as:

an active open-source project of the EPA that consists of a suite of programs for conducting air quality model simulations. CMAQ combines current knowledge in atmospheric science and air quality modeling, and an open-source framework to deliver fast, technically sound estimates of ozone, particulates, air toxics and acids deposition.

The information and data from this platform can be found at the following website CMAQ.

2.4 Context

2.4.1 MN Emissions Inventory

Every year all facilities (stationary point sources) report criteria pollutant emissions and every three years facilities report air toxics emissions. The EPA and the state of Minnesota emissions inventory team also calculate and report emissions for all other types of emissions sources such as mobile, non-road mobile, and area sources. These non-point emissions are reported every three years with the air toxics emissions. The Minnesota emission inventory is the foundation of MNRISKS. These emissions levels are reported in several data tools on the MPCA website. Their data and the visualization tools can be found on the following website https://www.pca.state.mn.us/air/emissions-data.

2.4.2 EPA’s NEI

The National Emissions Inventory (NEI) provides air toxics and criteria and air toxic pollutant emissions for the entire U.S. on the same schedule as described in the Minnesota Emissions Inventory section. This information can be found at the following website https://www.epa.gov/air-emissions-inventories/national-emissions-inventory-nei.

2.4.3 Facility locations

The most recent compilation of facility coordinates was performed for the 2017 emission inventory. This data is available in the INV_SOURCES table found in the RAPIDS schema of MPCA’s DELTA database.

Use the following code to load the current facility coordinates.

# This script connects to the MPCA database DELTA.

# A dplyr query collects source coordinates from the RAPIDS schema.

# Load packages

library(dplyr)

library(RODBC)

library(readr)You can view available database connections in R using the function odbcDataSources().

odbcDataSources()## PostgreSQL35W deltaw

## "PostgreSQL Unicode(x64)" "Oracle in OraClient11g_home2"

## Amazon Redshift ODBC DSN

## "Amazon Redshift (x64)"To connect to deltaw, use the function odbcConnect().

# Connect to DELTA

deltaw <- odbcConnect("deltaw",

uid = user,

pwd = password,

believeNRows = FALSE)

# Show all tables in RAPIDS schema

rapids_tbls <- sqlTables(deltaw, tableType = "TABLE", schema = "RAPIDS")

head(rapids_tbls)## TABLE_CAT TABLE_SCHEM TABLE_NAME TABLE_TYPE REMARKS

## 1 <NA> RAPIDS GEO_ACTIVITIES TABLE <NA>

## 2 <NA> RAPIDS GEO_COUNTIES TABLE <NA>

## 3 <NA> RAPIDS GEO_NATIONS TABLE <NA>

## 4 <NA> RAPIDS GEO_REGIONS TABLE <NA>

## 5 <NA> RAPIDS GEO_REGION_MEMBERS TABLE <NA>

## 6 <NA> RAPIDS GEO_STATES TABLE <NA># Get inventory year codes

inv_codes <- sqlQuery(deltaw, "SELECT * FROM RAPIDS.INV_INVENTORIES", max = 100, stringsAsFactors = F)

# Get code for 2017

inv_id <- filter(inv_codes, INVENTORY_YEAR == 2017)$RID

# Get sources for inventory year

sources <- sqlQuery(deltaw, paste0("SELECT * FROM RAPIDS.INV_SOURCES WHERE INVENTORY_RID = ", inv_id),

max = 10000,

stringsAsFactors = F,

as.is = T)

# Get source coordinates

src_coords <- sqlQuery(deltaw, "SELECT * FROM RAPIDS.INV_COORDINATES",

max = 100000,

stringsAsFactors = F,

as.is = T)

# Join coordinates to sources

sources <- left_join(sources, src_coords, by = c("RID" = "ENTITY_RID"))

# View data

sources %>% select(SOURCE_ID, SOURCE_TYPE, SOURCE_NAME, LONGITUDE, LATITUDE) %>% glimpse()## Rows: 9,426

## Columns: 5

## $ SOURCE_ID <chr> "2799000257", "2703700102", "2706900014", "2708900012", "2~

## $ SOURCE_TYPE <chr> "POINT", "POINT", "POINT", "POINT", "POINT", "POINT", "POI~

## $ SOURCE_NAME <chr> "Gravel Products Inc", "Great Lakes Coca-Cola Distribution~

## $ LONGITUDE <chr> NA, "-93.153", "-97.0603", "-96.2385", "-95.2051", "-93.38~

## $ LATITUDE <chr> NA, "44.8567", "48.9907", "48.2558", "47.5853", "45.111953~2.4.4 Weather observations

Historical meteorological observations are available from multiple sources.

Raw data

- An internal Tableau workbook provides raw results for Minnesota meteorological stations: tableau.pca.state.mn.us/#/workbooks/5714.

Quality assured data

- The AQI forecast uses a web API provided by DarkSky for current forecast information and quality assured historical observations.

2.4.5 HYSPLIT wind trajectories

Wind trajectories are useful for determining the primary sources contributing to elevated air monitoring results. Trajectory results for the air monitoring netowork are available in WAIR for the years 2007 to 2017. The R package SplitR was used to automate HYSPLIT modeling for each air monitoring location.

Use the following code to query WAIR for HYSPLIT results.

# This script connects to the MPCA database WAIR.

# A dplyr query collects HYSPLIT modeling results for Anoka Airport.

# Load packages

library(dplyr)

# Open a connection to the database WAIR, schema hys ##

my_wair <- src_postgres(dbname = 'wair', host = "eiger", user = "username", password = "password")

# Show tables

src_tbls(my_wair) %>% sort()

# Connect to hys.backtrajectory table ##

hys <- tbl(my_wair, "hys.backtrajectory")

hys <- tbl(my_wair, sql('hys.backtrajectory'))

# Collect data for Anoka Airport after year 2010 ##

hys_mpls <- hys %>%

select(-the_geom) %>%

filter(site_catid == "27-003-1002", yr > 10) %>%

collect(n = 2000)

# View data

head(hys_mpls)2.4.6 Land use maps

Land use shapefiles are maintained by MPCA GIS technical staff and stored on the agency R-drive at R:\landuse_landcover.

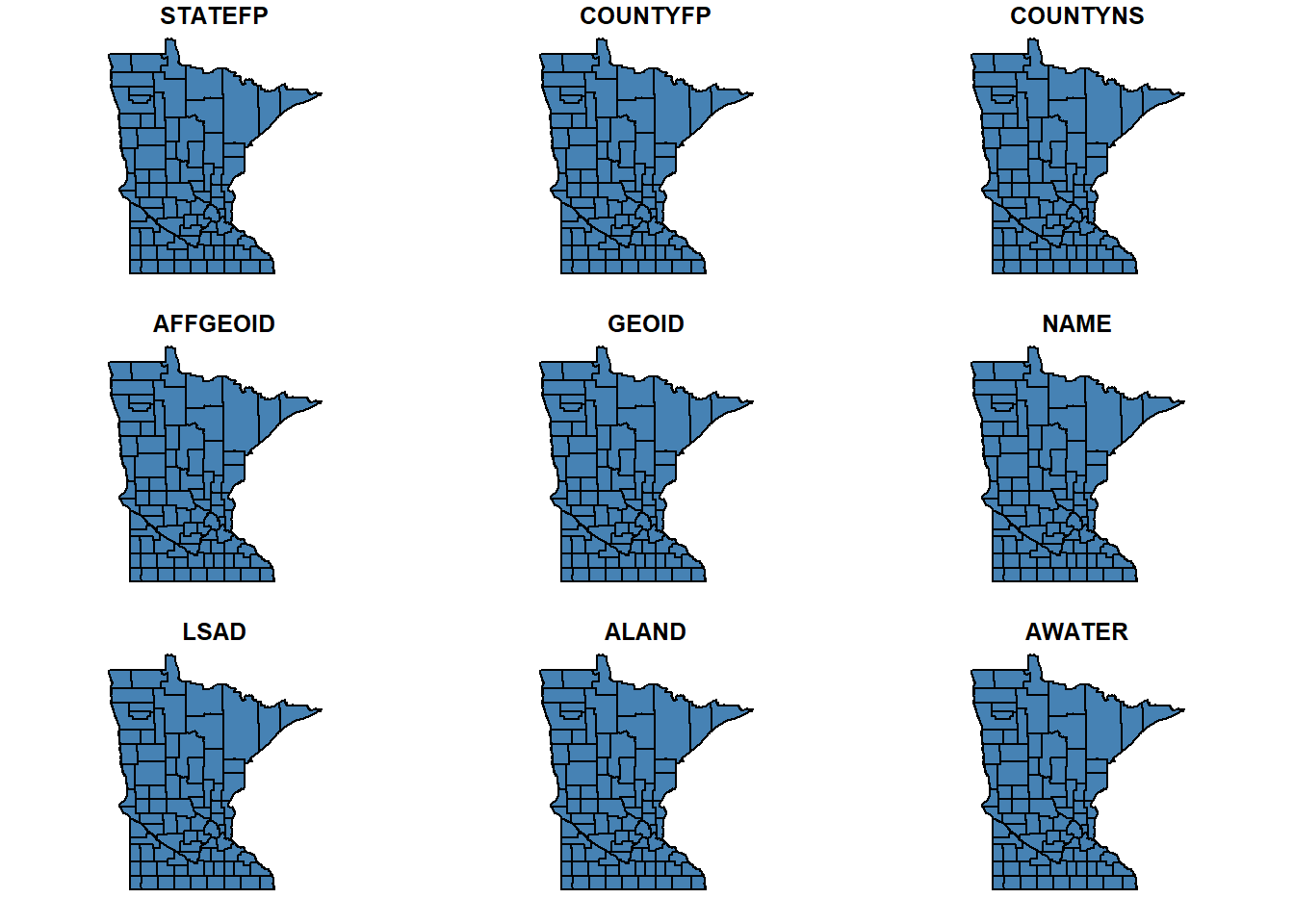

2.4.7 United States Census boundaries

Census boundaries can be loaded into R for mapping air data to Census tracts and block groups.

Use the following code to download and map MN boundaries.

# This script downloads shapefiles of Minnesota

## Counties

## Census tracts

## Census Block groups

# Load packages

library(tigris)

# Load boundaries

county_bounds <- counties(state = "MN", cb = T)# Plot

plot(county_bounds, col = "steelblue")

Tracts & Block groups

tract_bounds <- tracts(state = "MN", cb = T)

bg_bounds <- block_groups(state = "MN", cb = T)2.4.8 American Community Survey (ACS)

The ACS provides updated demographic statistics used for population estimates and Environmental Justice indicators.

Use the following code to download Minnesota ACS results.

# This script downloads American Community Survey (ACS) results for MN

# Load packages

library(tidycensus)

# ACS data requires a Census key

# Visit: http://api.census.gov/data/key_signup.html

census_api_key("Your_API_key")

# View all ACS variables

acs_variables <- load_variables(2015, "acs5", cache = TRUE)

# Download 5-yr population estimates for 2015

pops_2015 <- get_acs(geography = "tract",

state = "MN",

variables = "B01003_001",

survey = "acs5",

year = 2015)

# Download decennial population estimates for 2010

pops_2010 <- get_decennial(geography = "tract",

state = "MN",

variables = "P0080001",

year = 2010)

# Download household median income for 2015

med_inc_2015 <- get_acs(geography = "tract",

state = "MN",

variables = "B19013_001",

survey = "acs5",

year = 2015)