R Training | Day 3

Welcome back Jedis!

Open your RStudio project

- Open your project folder from last week

- Double click the .Rproj file to open RStudio

Open a New script

File > New File > R Script

- Click the floppy disk save icon

Give it a name:

03_day.Rwill work well

Schedule

- Review

- Working with dates - Endor Storm Chasing

- The magic pipe

%>% - Where’s Finn?

- Join 2 tables:

left_jointo the rescue - Calculate summary stats for your entire table

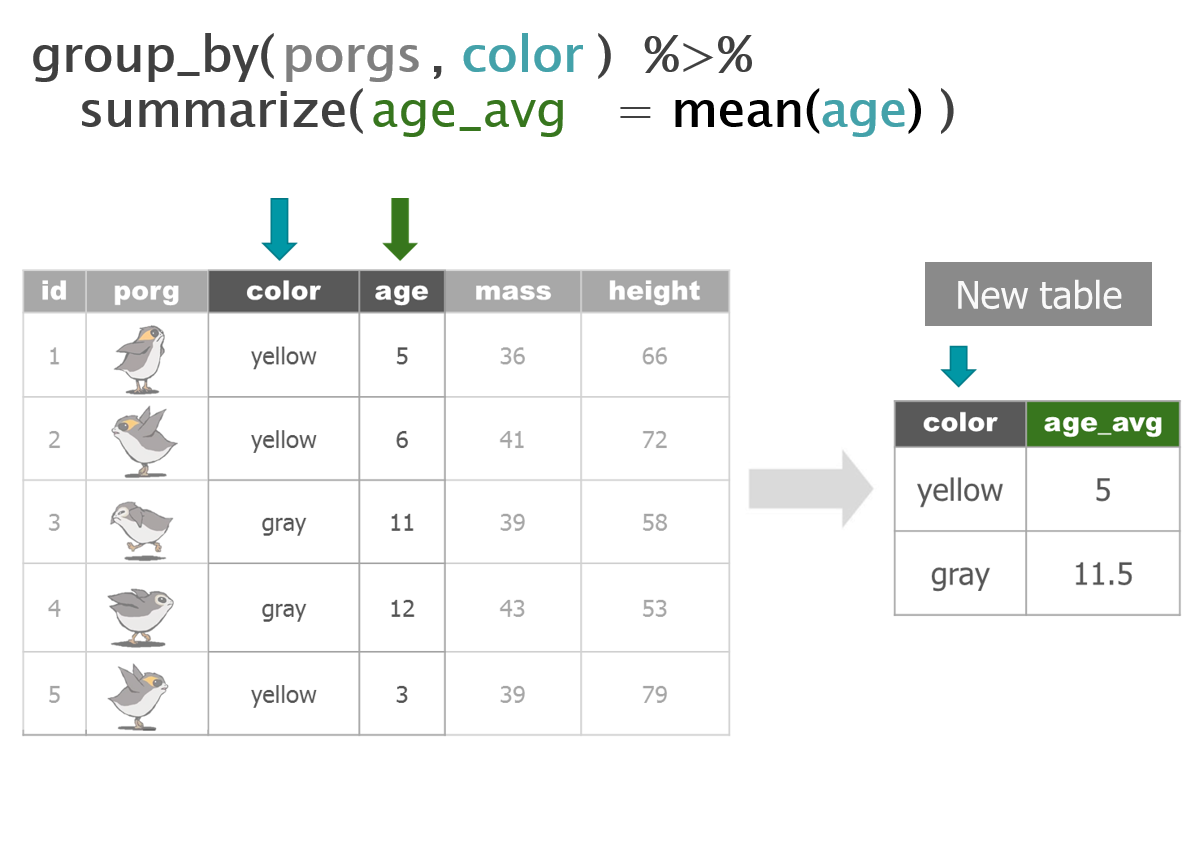

group_by: Calculate stats for each group and category (for each monitoring site, each pollutant, each year, or every thingymajig in your data)- Save & Export data

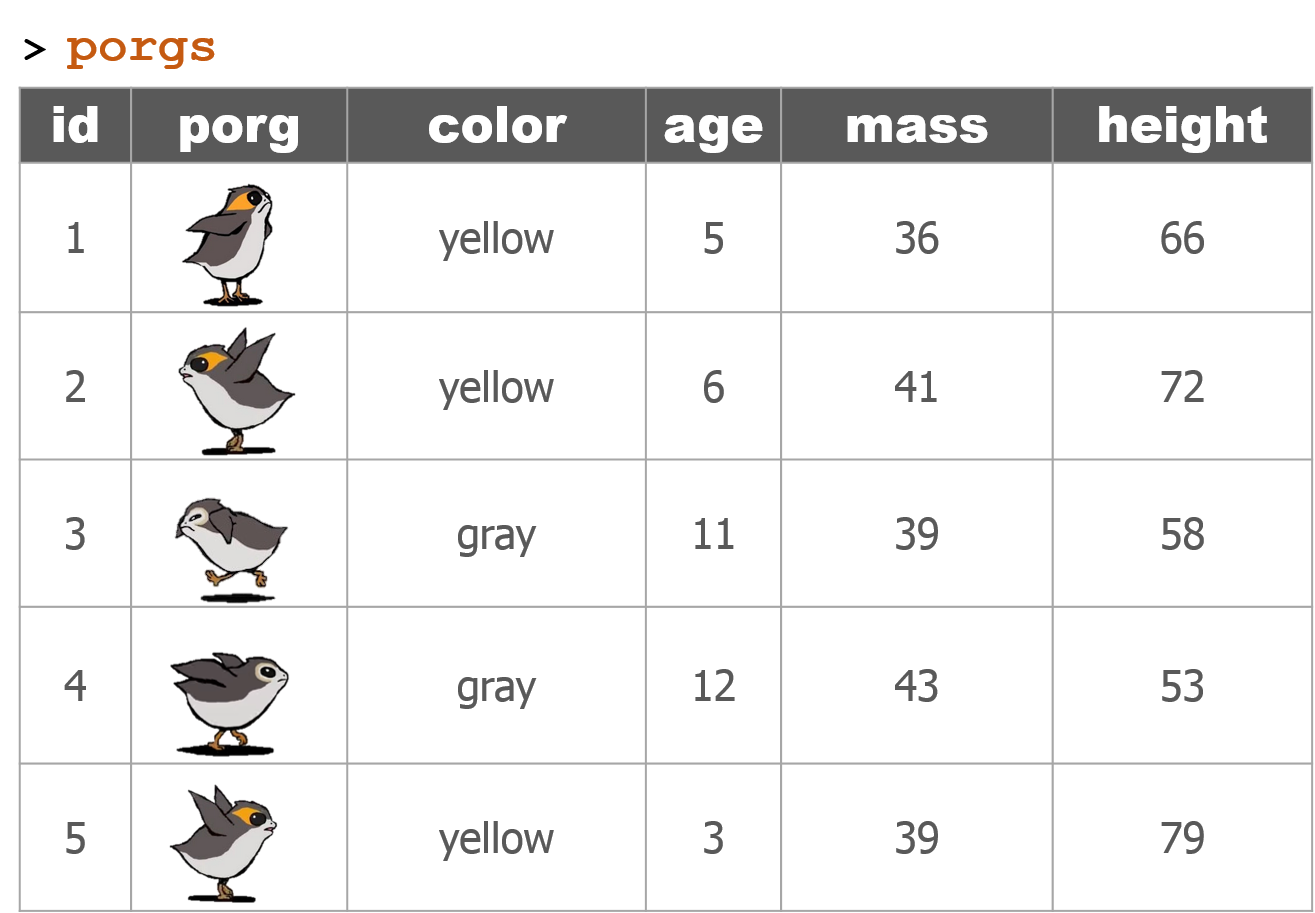

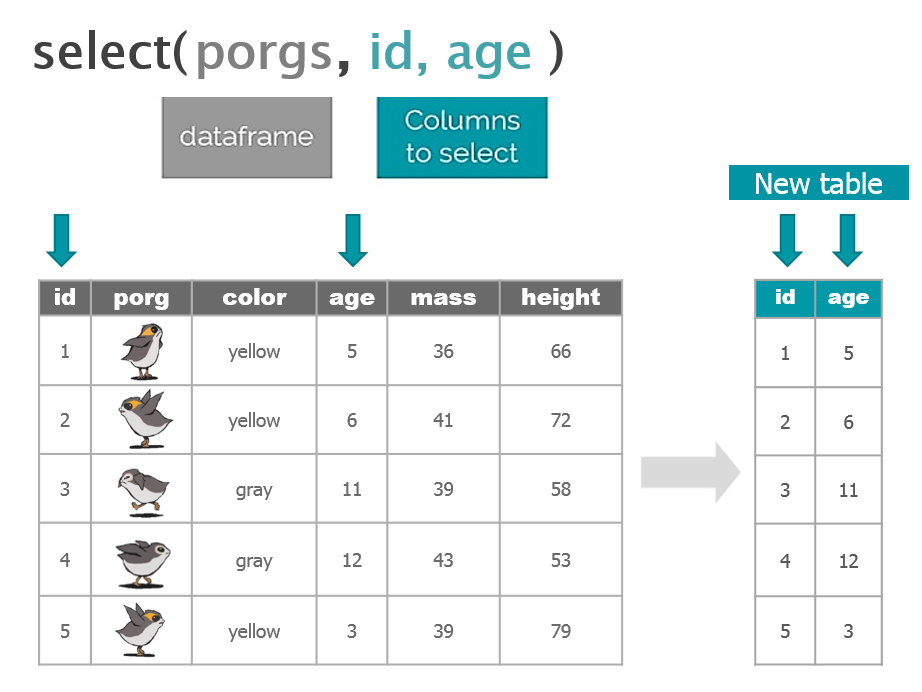

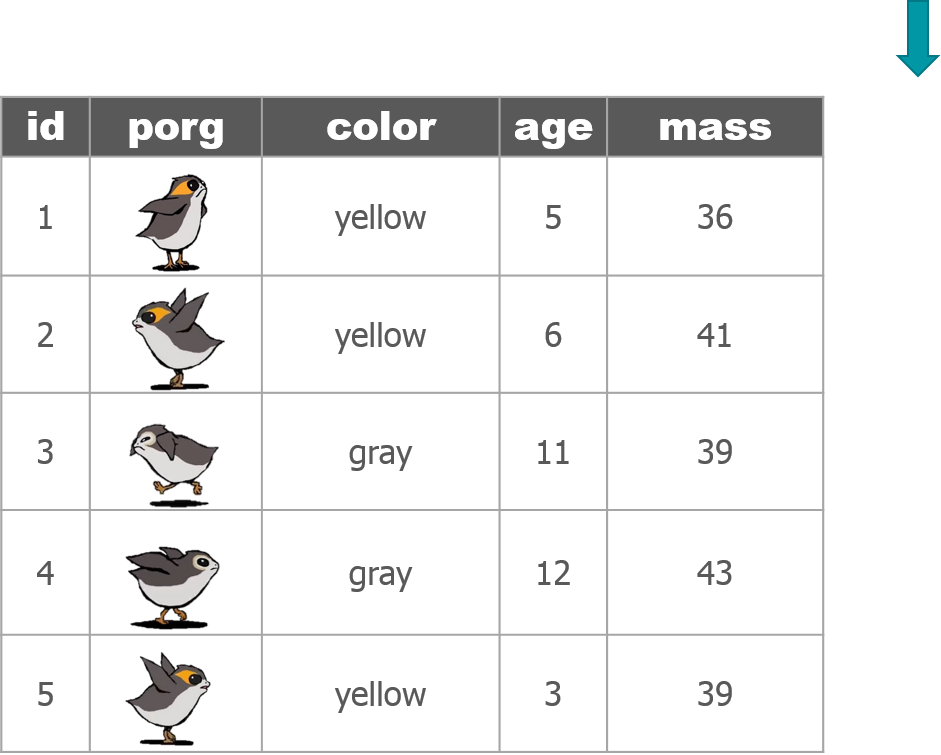

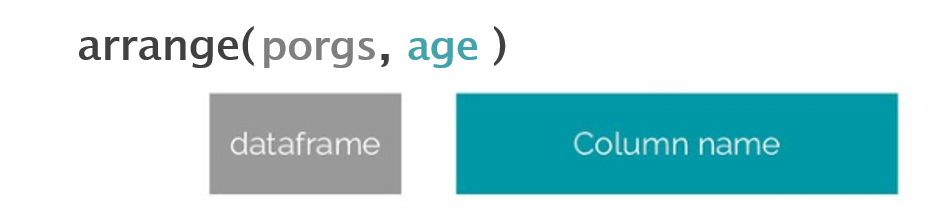

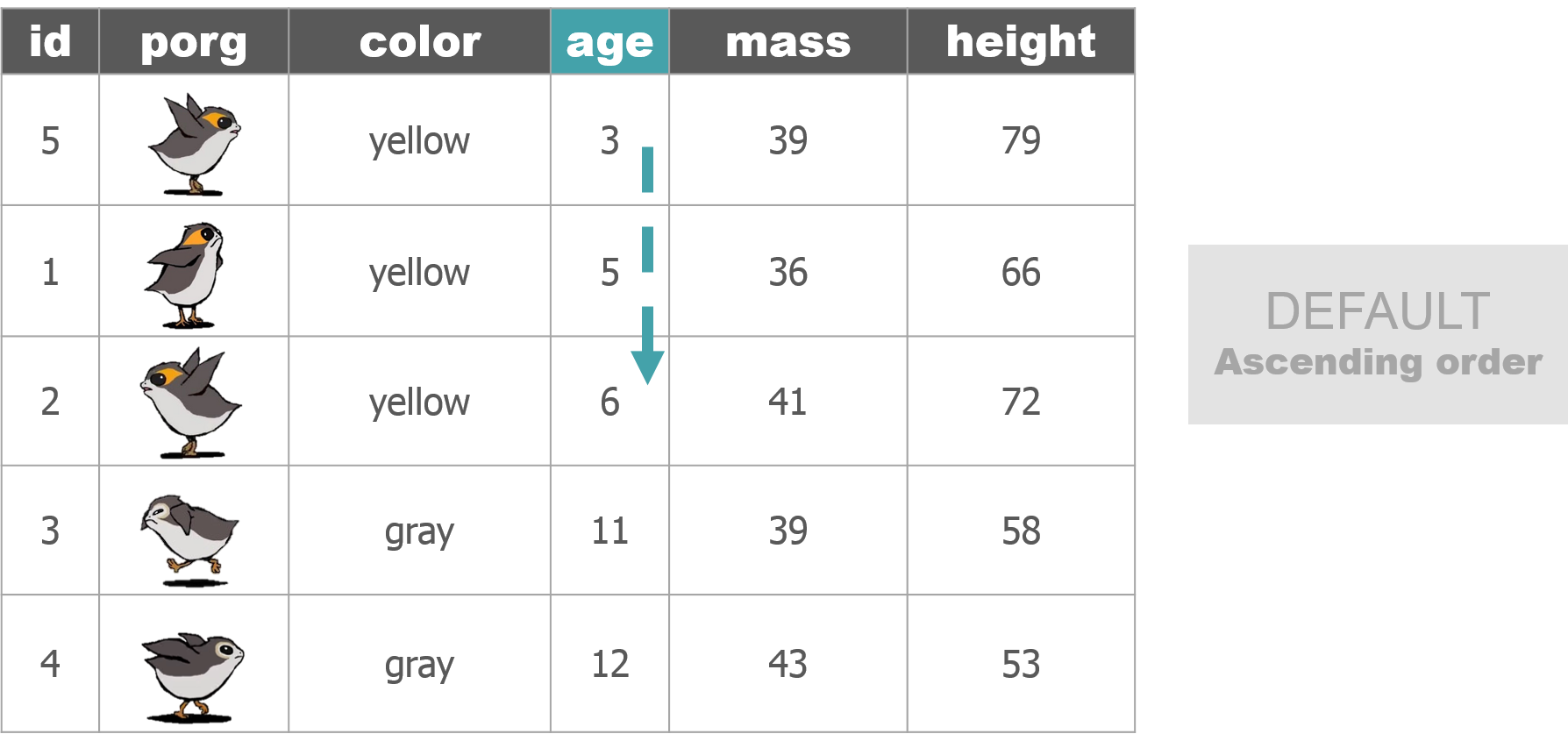

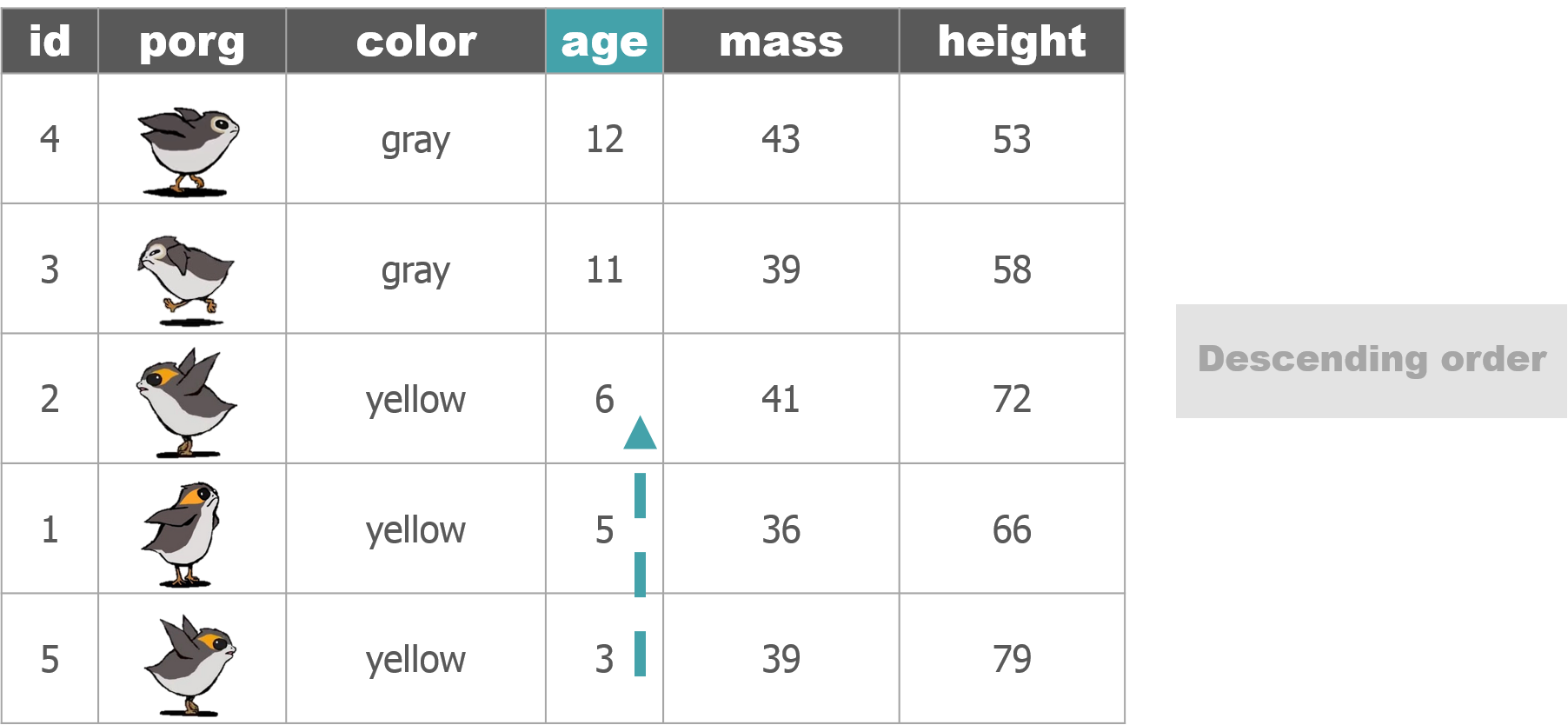

Porg review

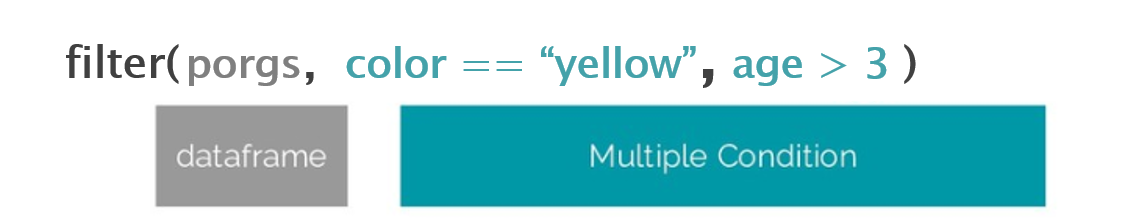

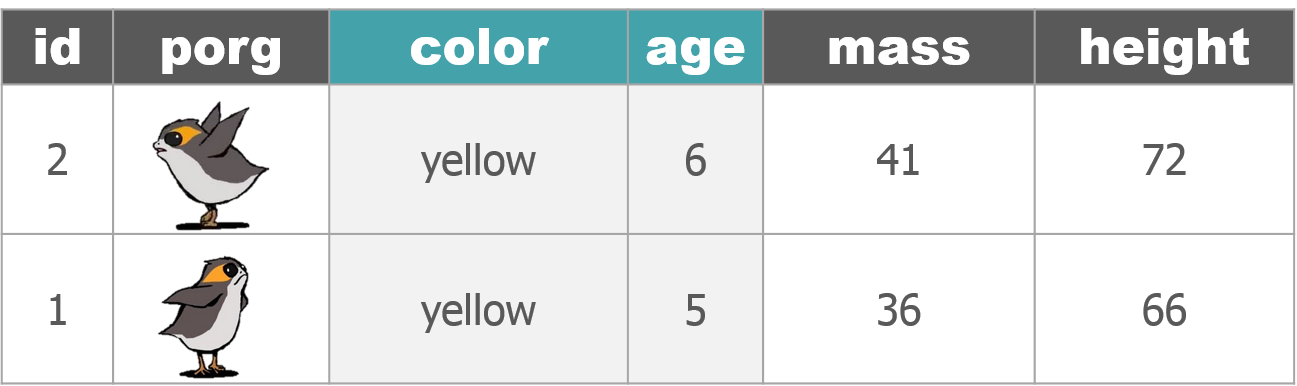

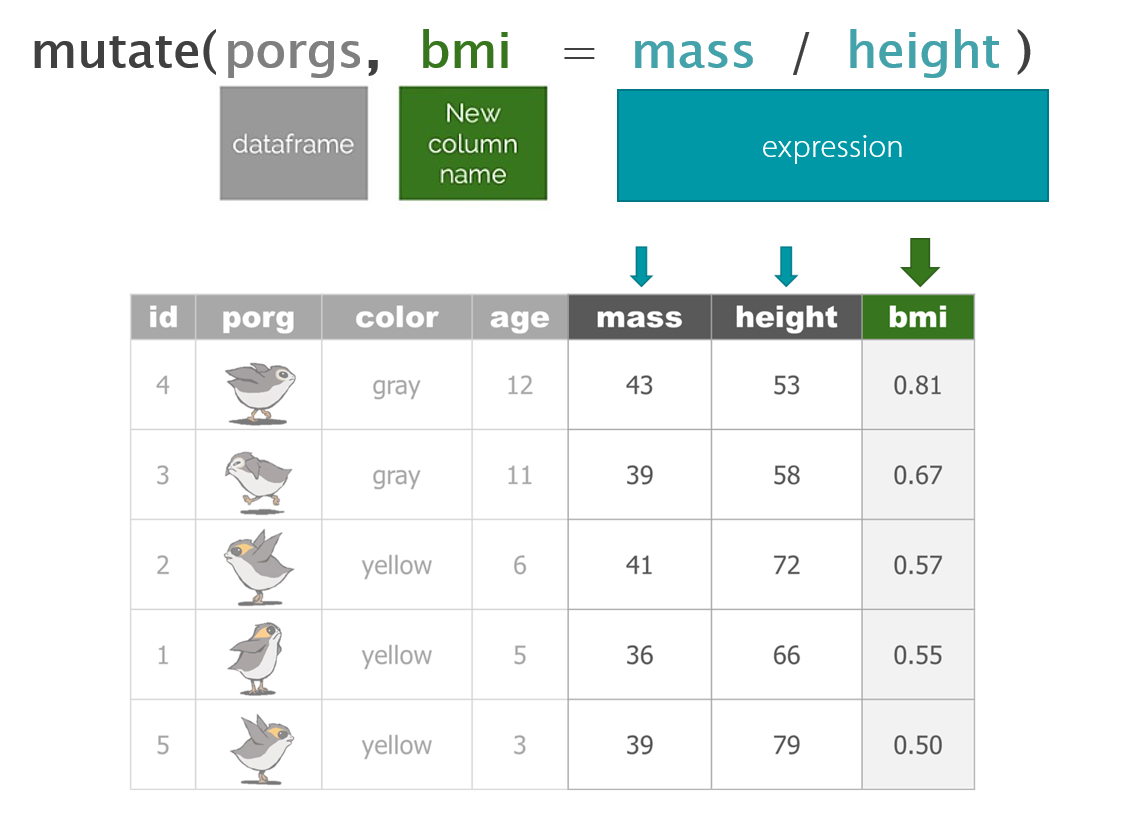

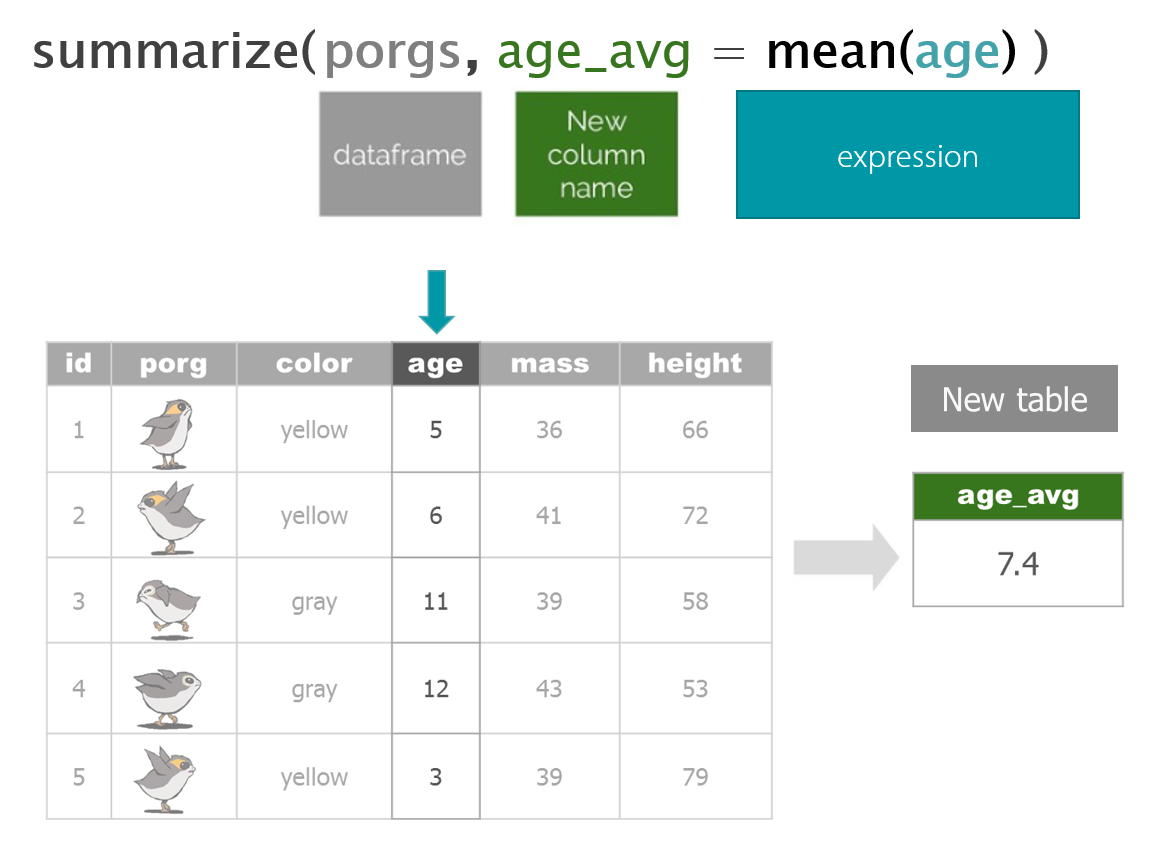

The poggle of porgs has returned to help us review the dplyr functions. Follow along by downloading the porg data from the URL below.

library(readr)

porgs <- read_csv("https://itep-r.netlify.com/data/porg_data.csv")

New mission!

BB8 received new data from the wookies suggesting there was a large magnetic storm just before Site 1 burned down on Endor. Sounds like we’re going to be STORM CHASERS!

Get the dataset

While we’re relaxing and flying to Endor let’s get set up with our new data.

Endor Setup

- Download the data.

- DOWNLOAD - Get the Endor monitoring data

- Create a new folder called

datain your project folder. - Move the downloaded Endor file to there.

- Create a new R script called

endor.R

Now we can read in the data from the data folder with our favorite read_csv function.

library(readr)

library(dplyr)

library(janitor)

air_endor <- read_csv("data/air_endor.csv")

names(air_endor)

# Clean the column names

air_endor <- clean_names(air_endor)

names(air_endor)## [1] "analyte" "lab_code" "units" "start_run_date"

## [5] "site_name" "long_site_name" "planet" "result"Note

When your project is open, RStudio sets the working directory to your project folder. When reading files from a folder outside of your project, you’ll need to use the full file path location.

For example, for a file on the X-drive: X://Programs/Air_Quality/Ozone_data.csv

Welcome to Endor!

Let’s get acquainted with our new Endor data set.

Remember the different ways?

Hint:

summary(),glimpse(),nrow(),distinct()

glimpse(air_endor)## Observations: 1,190

## Variables: 8

## $ analyte <chr> "1-Methylnaphthalene", "1-Methylnaphthalene", "...

## $ lab_code <chr> "HV-403", "HV-408", "HV-411", "HV-415", "HV-418...

## $ units <chr> "ng/m3", "ng/m3", "ng/m3", "ng/m3", "ng/m3", "n...

## $ start_run_date <chr> "7/11/2016", "7/23/2016", "8/5/2016", "8/16/201...

## $ site_name <chr> "battlesite1", "battlesite1", "battlesite1", "b...

## $ long_site_name <chr> "Endor_Tana_forestmoon_battlesite1", "Endor_Tan...

## $ planet <chr> "Endor", "Endor", "Endor", "Endor", "Endor", "E...

## $ result <dbl> 0.00291, 0.00000, 0.00000, 0.00608, 0.00000, 0....distinct(air_endor, analyte)## # A tibble: 27 x 1

## analyte

## <chr>

## 1 1-Methylnaphthalene

## 2 2-Methylnaphthalene

## 3 5-Methylchrysene

## 4 Acenaphthene

## 5 Anthanthrene

## 6 Benz[a]anthracene

## 7 Benzo[a]pyrene

## 8 Benzo[c]fluorene

## 9 Benzo[e]pyrene

## 10 Benzo[g,h,i]perylene

## # ... with 17 more rowsWoah. There are definitely more analytes than we need. We only want to know about magnetic_field data. Let’s filter down to only that analyte.

mag_endor <- filter(air_endor, analyte == "magnetic_field")Boom! How many rows are left now?

How do we filter on the date column?

With time series data it’s helpful for R to know which columns are date columns. Dates come in many formats and the standard format in R is 2019-01-15, also referred to as Year-Month-Day format.

We use the lubridate package to help format our dates.

1 | Dates

The lubridate package

It’s about time! Lubridate makes working with dates much easier.

We can find how much time has elapsed, add or subtract days, and find seasonal and day of the week averages.

Convert text to a DATE

| Function | Order of date elements |

|---|---|

mdy() |

Month-Day-Year :: 05-18-2019 or 05/18/2019 |

dmy() |

Day-Month-Year (Euro dates) :: 18-05-2019 or 18/05/2019 |

ymd() |

Year-Month-Day (science dates) :: 2019-05-18 or 2019/05/18 |

ymd_hm() |

Year-Month-Day Hour:Minutes :: 2019-05-18 8:35 AM |

ymd_hms() |

Year-Month-Day Hour:Minutes:Seconds :: 2019-05-18 8:35:22 AM |

Get date parts

| Function | Date element |

|---|---|

year() |

Year |

month() |

Month as 1,2,3; For Jan, Feb, Mar use label=TRUE |

day() |

Day of the month |

wday() |

Day of the week as 1,2,3; For Sun, Mon, Tue use label=TRUE |

| - Time - | |

hour() |

Hour of the day (24hr) |

minute() |

Minutes |

second() |

Seconds |

tz() |

Time zone |

Install lubridate

First, type or copy and paste install.packages("lubridate") into RStudio.

Clean the dates

Let’s use the mdy() function to turn the start_run_date column into a nicely formatted date.

library(lubridate)

mag_endor <- mutate(mag_endor, date = mdy(start_run_date),

year = year(date))According to the request we received, the Resistance is only interested in observations from the year 2017. So let’s filter the data down to only dates within that year.

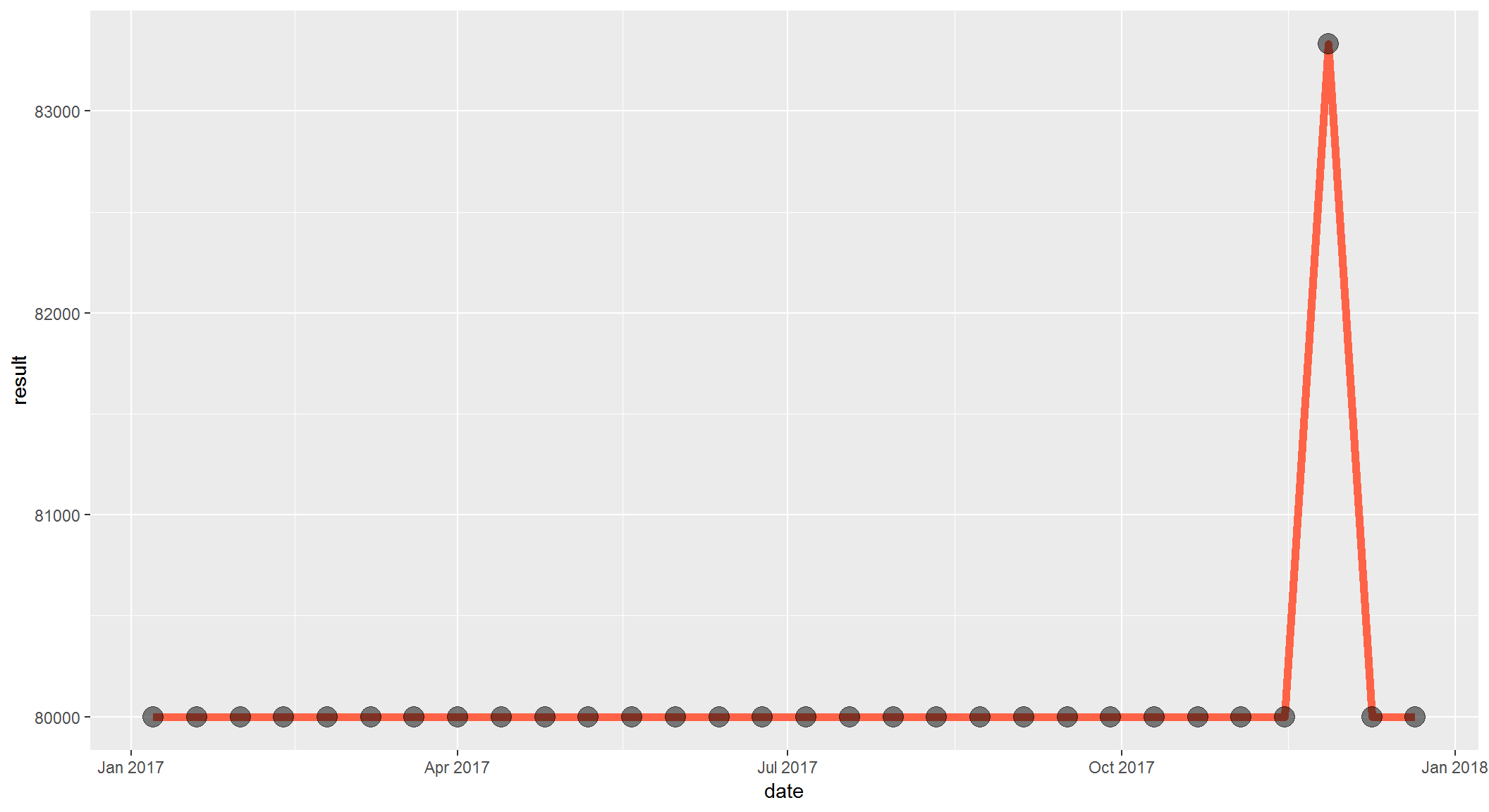

mag_endor <- filter(mag_endor, year == "2017")Time series

ggplot(mag_endor, aes(x = date, y = result)) +

geom_line(size = 2, color = "tomato") +

geom_point(size = 5, alpha = 0.5) # alpha changes transparency

Looks like the measurements definitely picked up a signal towards the end of the year. Let’s make a different chart to show when this occurred.

Mission complete!

Aha! There really was a spike in the magnetic field in November. Hopefully, the Resistance will reward us handsomely for this information.

2 | The pipe operator

I wish I could type the name of the data frame less often.

Now you can!

We use the %>% to chain functions together and make our scripts more streamlined. We call it the pipe.

Here are 2 examples of how the %>% operator is helpful.

#1: It eliminates the need for nested parentheses.

Say you wanted to take the sum of 3 numbers and then take the log and then round the final result.

round(log(sum(c(10,20,30,40))))The code doesn’t look much like the words we used to describe it. Let’s pipe it so we can read the code from left to right.

c(10,20,30,50) %>% sum() %>% log10() %>% round()#2: We can combine many processing steps into one cohesive chunk.

Here are some of the functions we’ve applied to the scrap data so far:

scrap <- arrange(scrap, desc(price_per_pound))

scrap <- filter(scrap, origin != "All")

scrap <- mutate(scrap,

scrap_finder = "BB8",

measure_method = "REM-24")We can use the %>% operator and write it this way.

scrap <- scrap %>%

arrange(desc(price_per_pound)) %>%

filter(origin != "All") %>%

mutate(scrap_finder = "BB8",

measure_method = "REM-24")EXERCISE

Similar to above, use the %>% to combine the two Endor lines below.

# Filter to only magnetic_field measurements

mag_endor <- filter(air_endor, analyte == "magnetic_field")

# Add a date column to the filtered data

mag_endor <- mutate(mag_endor, date = mdy(start_run_date))So where were we?

Oh right, we we’re enjoying our time on beautiful lush Endor. Aren’t we missing somebody…?

| Finn needs us!

That’s enough scuttlebutting around on Endor, Finn needs us back on Jakku. It turns out we forgot to pick-up Finn when we left. Now he’s being held ransom by Junk Boss Plutt. We’ll need to act fast to get to him before the Empire does. Blast off!

More data

Update from BB8!

BB8 was busy on our flight back to Jakku, and was able to recover a full set of scrap records from the notorious Unkar Plutt. Let’s take a look.

library(readr)

library(dplyr)

# Read in the full scrap database

scrap <- read_csv("https://itep-r.netlify.com/data/starwars_scrap_jakku_full.csv")3 | Jakku re-visited

Okay, so we’re back on this ol’ dust bucket. Let’s try not to forget anything this time. We’re quickly running out of friends on this planet.

A scrappy ransom

Mr. Baddy Plutt is demanding 10,000 items of scrap for Finn. Sounds expensive, but lucky for us he didn’t clarify the exact items. Let’s find the scrap that weighs the least per shipment and try to make this transaction as light as possible.

Take a look at our NEW scrap data and see if we have the weight of all the items.

# What unit types are in the data?

unique(scrap$units)## [1] "Cubic Yards" "Items" "Tons"Or return results as a data frame

distinct(scrap, units)## # A tibble: 3 x 1

## units

## <chr>

## 1 Cubic Yards

## 2 Items

## 3 TonsHmmm…. So how much does a cubic yard of Hull Panel weigh?

A lot? 32? Maybe…

I think we’re going to need some more data.

“Hey BB8!”

“Do your magic data finding thing.”

Weight conversion

It took a while, but with a few droid bribes BB8 was able to track down a Weight conversion table from his old droid buddies. Our current data shows the total cubic yards for some scrap shipments, but not how much the shipment weighs.

Read the weight conversion table

# The data's URL

convert_url <- "https://rtrain.netlify.com/data/conversion_table.csv"

# Read the conversion data

convert <- read_csv(convert_url)

head(convert, 3)## # A tibble: 3 x 3

## item units pounds_per_unit

## <chr> <chr> <dbl>

## 1 Bulkhead Cubic Yards 321

## 2 Hull Panel Cubic Yards 641

## 3 Repulsorlift array Cubic Yards 467Stars! A cubic yard of Hull Panel weighs 641 lbs. That’s what I thought!

Let’s join this new conversion table to the scrap data to make our calculations easier. To do that we need to make a new friend.

Say “Hello” to left_join()!

4 | Join tables

left_join() works like a zipper and combines two tables based on one or more variables. It’s called “left”-join because the entire table on the left side is retained. Anything that matches from the right table gets to join the party, but the rest will be ignored.

Join 2 tables

left_join(table1, table2, by = c("columns to join by"))

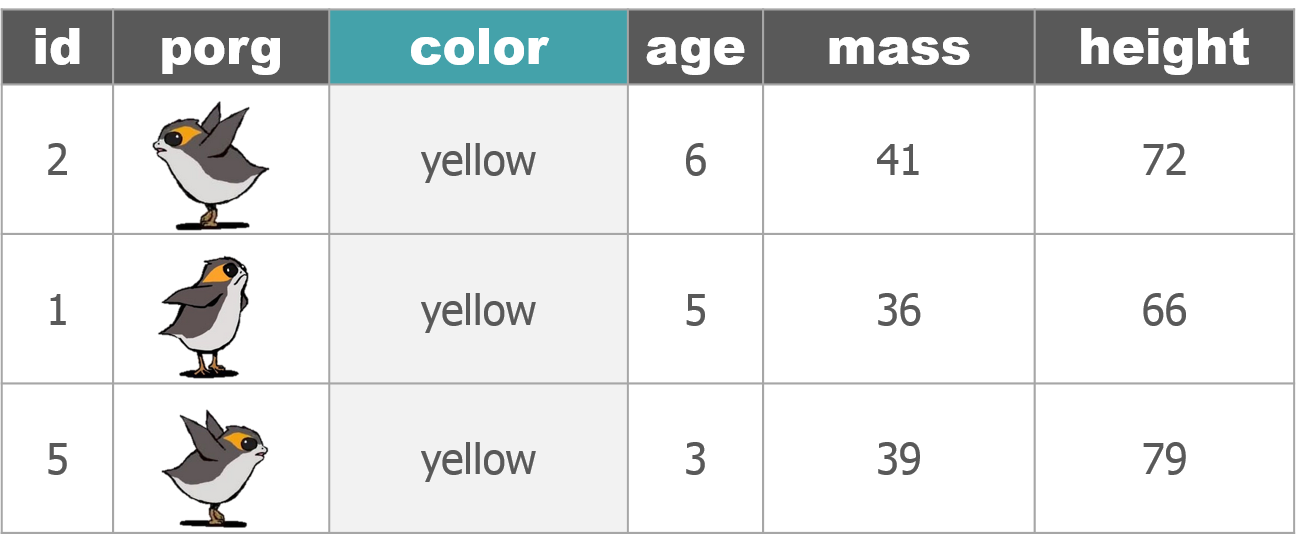

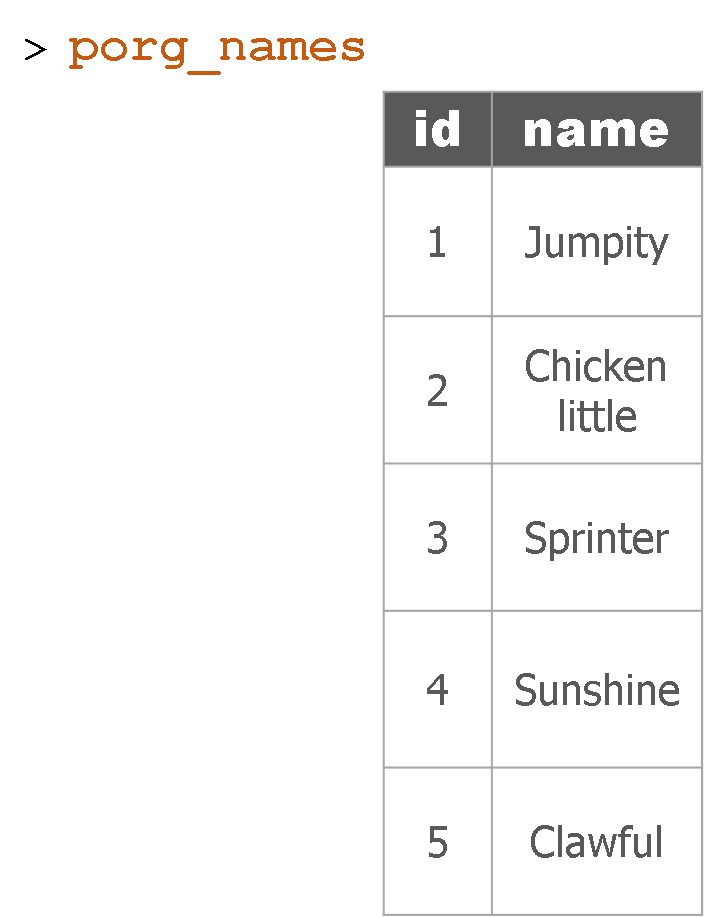

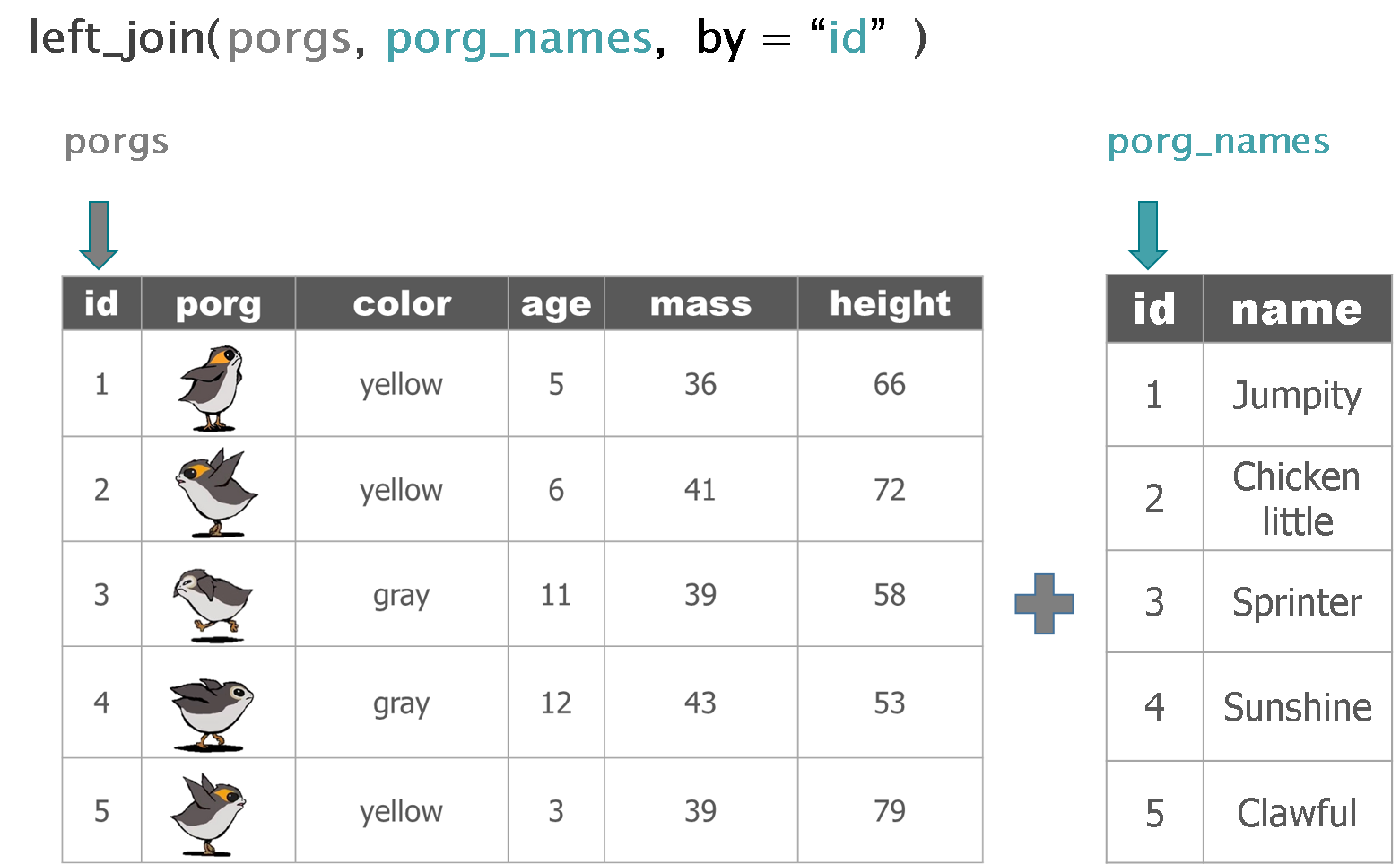

Adding porg names

Remember our porg friends? How rude of us not to share their names. Wups!

Here they are:

Hey now! That’s not very helpful. Who’s who? Let’s join their names to the rest their data.

The result

Back to scrap land

Let’s apply our new left_join() skills to the scrap data.

Join the conversion table to the scrap

Look at the tables. What columns in both tables do we want to join by?

scrap <- left_join(scrap, convert,

by = c("item" = "item", "units" = "units"))Want to skimp on typing?

When the 2 tables share column names that are the same, left_join() will automatically search for matching columns. Nice! So the code below does the same as above.

scrap <- left_join(scrap, convert)

head(scrap, 4)## # A tibble: 4 x 8

## receipt_date item origin destination amount units price_per_pound

## <chr> <chr> <chr> <chr> <dbl> <chr> <dbl>

## 1 4/1/2013 Bulk~ Crate~ Raiders 4017 Cubi~ 1005.

## 2 4/2/2013 Star~ Outsk~ Trade cara~ 1249 Cubi~ 1129.

## 3 4/3/2013 Star~ Outsk~ Niima Outp~ 4434 Cubi~ 1129.

## 4 4/4/2013 Hull~ Crate~ Raiders 286 Cubi~ 843.

## # ... with 1 more variable: pounds_per_unit <dbl>Want more details?

You can type ?left_join to see all the arguments and options.

Total pounds per shipment

Let’s mutate()!

Now that we have pounds per unit we can use mutate to calculate the total pounds for each shipment.

Fill in the blank

scrap <- scrap %>%

mutate(total_pounds = amount * _____________ )Show code

scrap <- scrap %>%

mutate(total_pounds = amount * pounds_per_unit)Total price per shipment

EXERCISE

Total price

We need to do some serious multiplication. We now have the total amount shipped in pounds, and the price per pound, but we want to know the total price for each transaction.

How do we calculate that?

# Calculate the total price for each shipment

scrap <- scrap %>%

mutate(credits = ________ * ________ )

Total price

We need to do some serious multiplication. We now have the total amount shipped in pounds, and the price per pound, but we want to know the total price for each transaction.

How do we calculate that?

# Calculate the total price for each shipment

scrap <- scrap %>%

mutate(credits = total_pounds * ________ )

Total price

We need to do some serious multiplication. We now have the total amount shipped in pounds, and the price per pound, but we want to know the total price for each transaction.

How do we calculate that?

# Calculate the total price for each shipment

scrap <- scrap %>%

mutate(credits = total_pounds * price_per_pound)

Price per item

Great! Let’s add one last column. We can divide the shipment’s credits by the amount of items to get the price_per_unit.

# Calculate the price per unit

scrap <- scrap %>%

mutate(price_per_unit = credits / amount)Data analysts often get asked summary questions such as:

- What’s the highest or worst?

- What’s the lowest number?

- Is that worse than average?

- What’s the average tonnage of scrap from Cratertown this year?

- What city is making the most money?

On to summarize()!

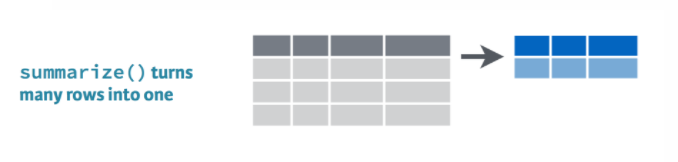

5 | summarize() this!

summarize() allows you to apply a summary function like median() to a column and collapse your data down to a single row. To really dig into summarize you’ll want to know some common summary functions, such as sum(), mean(), median(), min(), and max().

sum()

Use summarize() and sum() to find the total credits of all the scrap.

summarize(scrap, total_credits = sum(credits))mean()

Use summarize() and mean() to calculate the mean price_per_pound in the scrap report.

summarize(scrap, mean_price = mean(price_per_pound, na.rm = T))Note the na.rm = TRUE in the mean() function. This tells R to ignore empty cells or missing values that show up in R as NA. If you leave na.rm out, the mean function will return ‘NA’ if it finds a missing value in the data.

median()

Use summarize to calculate the median price_per_pound in the scrap report.

summarize(scrap, median_price = median(price_per_pound, na.rm = T))max()

Use summarize to calculate the maximum price per pound any scrapper got for their scrap.

summarize(scrap, max_price = max(price_per_pound, na.rm = T))min()

Use summarize to calculate the minimum price per pound any scrapper got for their scrap.

summarize(scrap, min_price = min(price_per_pound, na.rm = T))nth()

Use summarize() and nth(Origin, 12) to find the name of the Origin City that had the 12th highest scrapper haul.

Hint: Use arrange() first.

arrange(scrap, desc(credits)) %>% summarize(price_12 = nth(origin, 12))quantile()

Quantiles are great for finding the upper or lower range of a column. Use the quantile() function to find the the 5th and 95th quantile of the prices.

summarize(scrap,

price_5th_pctile = quantile(price_per_pound, 0.05, na.rm = T),

price_95th_pctile = quantile(price_per_pound, 0.95))Hint: Add na.rm = T to quantile().

EXERCISE

Create a summary of the scrap data that includes 3 of the summary functions above. The following is one example.

summary <- scrap %>%

summarize(max_credits = __________ ,

mean_credits = __________ ,

min_pounds = __________ )n()

n() stands for count. It has the smallest function name I know of, but is super useful.

Use summarize and n() to count the number of reported scrap records going to Niima outpost.

Hint: Use filter() first.

niima_scrap <- filter(scrap, destination == "Niima Outpost") niima_scrap <- summarize(niima_scrap, scrap_records = n())Ok. That was fun. Now let’s do a summary for Cratertown. And then for Blowback Town. And then for Tuanul. And then for…

Wait!

Do we really need to

filterto the origin city that we’re interested in every single time?How about you just give me the mean price for every origin city. Then I could use that to answer a question about any city I want.

Okay. Fine. It’s time we talk about

group_by().

6 | group_by()

The junk Capital of Jakku

Which origin city had the most shipments of junk?

Use group_by with the column origin, and then usesummarize to count the number of records at each origin city.

Fill in the blank

scrap_shipments <- group_by(scrap, ______ ) %>%

summarize(shipments = ______ ) Show code

scrap_shipments <- group_by(scrap, origin ) %>%

summarize(shipments = n() ) Pop Quiz!

Which city had the most scrap shipments?

Tuanul

Outskirts

Reestki

Cratertown

Show solution

Cratertown

You’ve got the POWER!

Bargain hunters

Who’s selling goods for cheap?

Use group_by with the column origin, and then usesummarize to find the mean(price_per_unit) at each origin city.

Show code

mean_prices <- group_by(scrap, origin) %>%

summarize(mean_price = mean(price_per_unit, na.rm = T)) %>%

ungroup() EXPLORE: Rounding digits

You can round the prices to a certain number of digits using the round() function. We can finish by adding the arrange() function to sort the table by our new column.

mean_prices <- group_by(scrap, origin) %>%

summarize(mean_price = mean(price_per_unit, na.rm = T),

mean_price_round = round(mean_price, digits = 2)) %>%

arrange(mean_price_round) %>%

ungroup()Pro-tip!

Ending with ungroup() is good practice. This prevents your data from staying grouped after the summarizing has been completed.

7 | Save files

Let’s save the mean price summary table we created to a CSV. That way we can transfer it to a droid courier for delivery to Rey. To save a data frame we use the write_csv() function from our favorite readr package.

# Write the file to your results folder

write_csv(mean_prices, "results/prices_by_origin.csv")WARNING!

By default, when saving files R will overwrite a file if the file already exists in the same folder. It will not ask for confirmation. To be safe, save processed data to a new folder called results/ and not to your raw data/ folder.